Introduction to image classification models

These are notes from lesson 1 of fast.ai's Practical Deep Learning for Coders.

Train an image classifier: see car classification notebook

1. Notes on Learning

There is a companion course focusing on AI ethics here

Thoughts on education: play the whole game first to get an idea of the big picture. Don’t become afflicted with “elementitis”, i.e. getting bogged down in details too early.

- Coloured cups - green, amber, red - for each student to indicate how well they are following

- Meta Learning by Radek Osmulski - a book based on the author’s experience of learning about deep learning (via the fastai course)

- Mathematician’s Lament by Paul Lockhart - a book about the state of mathematics education

- Making Learning Whole by David Perkins - a book about approaches to holistic learning

RISE is a Jupyter notebook extension that turns notebooks into slides. Jeremy uses notebooks for: source code, book, blogging, CI/CD.

2. Background on Deep Learning and Image Classification

Before deep learning, the approach to machine learning was to enlist many domain experts to handcraft features and feed these into a constrained linear model (e.g. ridge regression). This is time-consuming and expensive, and it depends on having those experts available.

Neural networks learn these features. Looking inside a CNN, for example, shows that these learned features match interpretable features that an expert might handcraft. An illustration of the features learned is given in this paper by Zeiler and Fergus.

For image classifiers, you don’t need particularly large images as inputs. GPUs are so quick now that if you use large images, most of the time is spent on opening the file rather than computations. So often we resize images down to 400x400 pixels or smaller.
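To make the resizing point concrete, here is a minimal sketch in plain Python of how much data is saved by shrinking a photo so that its longer side is at most 400 pixels. The helper name fit_within and the 4000x3000 example photo are assumptions for illustration; they are not part of fastai or the lesson.

```python
def fit_within(width, height, max_side=400):
    """Scale (width, height) so the longer side is at most max_side.

    The aspect ratio is preserved and images that are already small
    enough are returned unchanged.
    """
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))


# Hypothetical 12-megapixel photo.
original = (4000, 3000)
small = fit_within(*original)  # (400, 300)

# Ratio of pixel counts before and after resizing.
reduction = (original[0] * original[1]) / (small[0] * small[1])
print(small, reduction)
```

In this example the resized image holds 100 times fewer pixels, which is why resizing up front removes most of the file-handling cost without the classifier needing the extra detail.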

For most use cases, there are pre-trained models and sensible default values that we can use. In practice, most of the time is spent on the input layer and output layer. For most models the middle layers are identical.

3. Overview of the fastai learner

Data blocks structure the input to learners. An overview of the DataBlock class:

- blocks determines the input and output types as a tuple. For multi-target classification this tuple can be of arbitrary length.

- get_items is a function that returns a list of all the inputs.

- splitter defines how to split the data into training and validation sets.

- get_y is a function that returns the label of a given input image.

- item_tfms defines what transforms to apply to the inputs before training, e.g. resize.

- dataloaders is a method that parallelises loading the data.
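The roles of get_items, splitter and get_y can be sketched without fastai at all. The block below is a plain-Python stand-in, not the library's implementation: the file listing, the folder names and the function bodies are all assumptions chosen for illustration. fastai ships its own helpers for these roles, such as get_image_files, RandomSplitter and parent_label.

```python
import random
from pathlib import PurePosixPath

# Hypothetical file listing, one folder per class. A real get_items
# would typically scan a directory on disk instead.
FILES = [
    PurePosixPath(f"cars/{label}/{i}.jpg")
    for label in ("sedan", "suv")
    for i in range(5)
]


def get_items(source):
    """Return a list of all the inputs."""
    return list(source)


def get_y(path):
    """Label an input by the name of its parent folder."""
    return path.parent.name


def splitter(items, valid_pct=0.2, seed=42):
    """Randomly split item indices into (training, validation) lists."""
    idxs = list(range(len(items)))
    random.Random(seed).shuffle(idxs)
    cut = int(len(items) * valid_pct)
    return idxs[cut:], idxs[:cut]


items = get_items(FILES)
train_idx, valid_idx = splitter(items)

# Each example is an (input, label) pair, mirroring the blocks tuple.
train = [(items[i], get_y(items[i])) for i in train_idx]
valid = [(items[i], get_y(items[i])) for i in valid_idx]
print(len(train), len(valid))
```

Fixing the seed keeps the validation set the same between runs, so results from different experiments stay comparable.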

A learner combines the model (e.g. a ResNet or an architecture from the timm library) with the data to run that model on (the dataloaders from the DataBlock).

The fine_tune method starts from pretrained model weights rather than randomised weights, so it only needs to learn the differences between your data and the data the original model was trained on.
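The idea of starting from pretrained weights can be illustrated with a toy example in plain Python. This is a sketch under stated assumptions, not how fastai implements fine_tune: the "pretrained" weights in BODY_W, the four data points and the training loop are all made up for illustration. Only the new output layer (the head) is trained here, while the body stays frozen; fastai's fine_tune begins the same way and then also unfreezes and trains the remaining layers.

```python
import math

# Stand-in for pretrained weights: fixed values assumed to have been
# learned elsewhere. They are never updated below.
BODY_W = ((1.0, -1.0), (0.5, 0.5))


def body(x):
    """Frozen feature extractor: a linear layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in BODY_W]


def predict(head, x):
    """Probability of class 1 from the trainable head on frozen features."""
    feats = body(x)
    z = sum(w * f for w, f in zip(head["w"], feats)) + head["b"]
    return 1.0 / (1.0 + math.exp(-z))


def mean_log_loss(head, data, eps=1e-12):
    """Average binary cross-entropy, clamped to avoid log(0)."""
    total = 0.0
    for x, y in data:
        p = min(max(predict(head, x), eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(data)


def train_head(head, data, lr=0.1, epochs=500):
    """Stochastic gradient descent on the head parameters only."""
    for _ in range(epochs):
        for x, y in data:
            feats = body(x)
            err = predict(head, x) - y  # gradient of the loss w.r.t. the logit
            head["w"] = [w - lr * err * f for w, f in zip(head["w"], feats)]
            head["b"] -= lr * err
    return head


# Hypothetical two-class dataset of (input, label) pairs.
DATA = [((2.0, 0.0), 1), ((0.0, 2.0), 0), ((3.0, 1.0), 1), ((1.0, 3.0), 0)]

head = {"w": [0.0, 0.0], "b": 0.0}  # new, untrained output layer
loss_before = mean_log_loss(head, DATA)
train_head(head, DATA)
loss_after = mean_log_loss(head, DATA)
print(loss_before, loss_after)
```

Because the frozen body already produces useful features, only three numbers (two head weights and a bias) have to be learned, which is the reason fine-tuning needs far less data and time than training from scratch.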

Image problems that can utilise deep learning include:

- Image classification

- Image segmentation

Other problem types, such as tabular data and collaborative filtering, use the same process. Only the block types passed to the DataBlock change; the rest stays the same.

4. Deep Learning vs Traditional Computer Programs

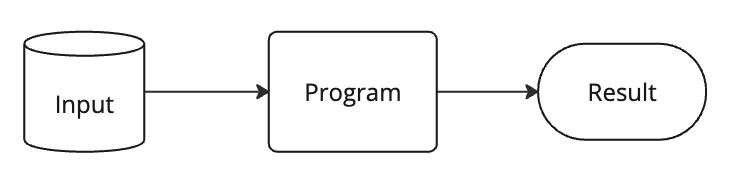

Traditional computer programs are essentially:

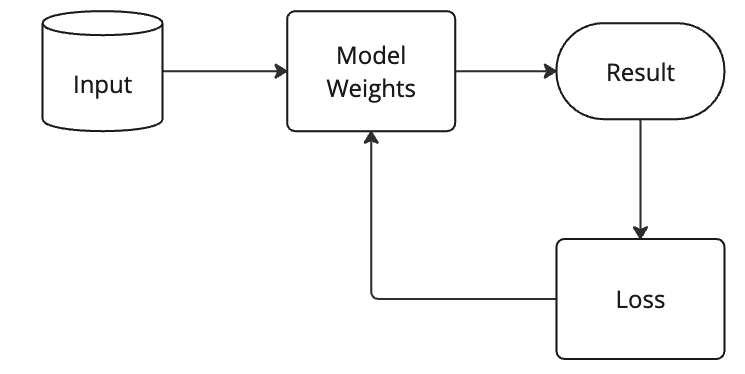

Deep learning models are: