1. Getting Started

1.1. AWS Global Infrastructure

Regions are geographic locations, e.g. europe-west-3, us-east-1, etc.

How should we choose a region?

- Compliance - data governance rules may require data within a certain location

- Proximity to reduce latency

- Available services vary by region

- Pricing varies by region

Each region can have multiple Availability Zones. There are usually between 3 and 6, e.g. ap-southeast-2a, ap-southeast-2b and ap-southeast-2c.

Each AZ contains multiple data centers with redundant power, networking and connectivity.

There are multiple Edge Locations/Points of Presence; 400 locations around the world.

1.2. Tour of the Console

Some services are global: IAM, Route 53, CloudFront, WAF

Most are region-scoped: EC2, Elastic Beanstalk, Lambda, Rekognition

The region selector is in the top right. The service selector in top left, or alternatively use search bar.

1.3. AWS Billing

Click on Billing and Cost Management in the top right of the screen.

This needs to first be activated for administrator IAM users. From the root account: Account (top right) -> IAM user and role access to billing information -> tick the Activate IAM Access checkbox.

- Bills tab - You can see bills per service and per region.

- Free Tier tab - Check what the free tier quotas are, and your current and forecasted usage.

- Budgets tab - set a budget.

Use a template -> Zero spend budget -> Budget name and email recipients. This will alert as soon as you spend any money. There is also a monthly cost budget for regular reporting.

2. IAM

2.1. Overview

Identity and access management. This is a global service.

The root account is created by default. It shouldn’t be used or shared; just use it to create users.

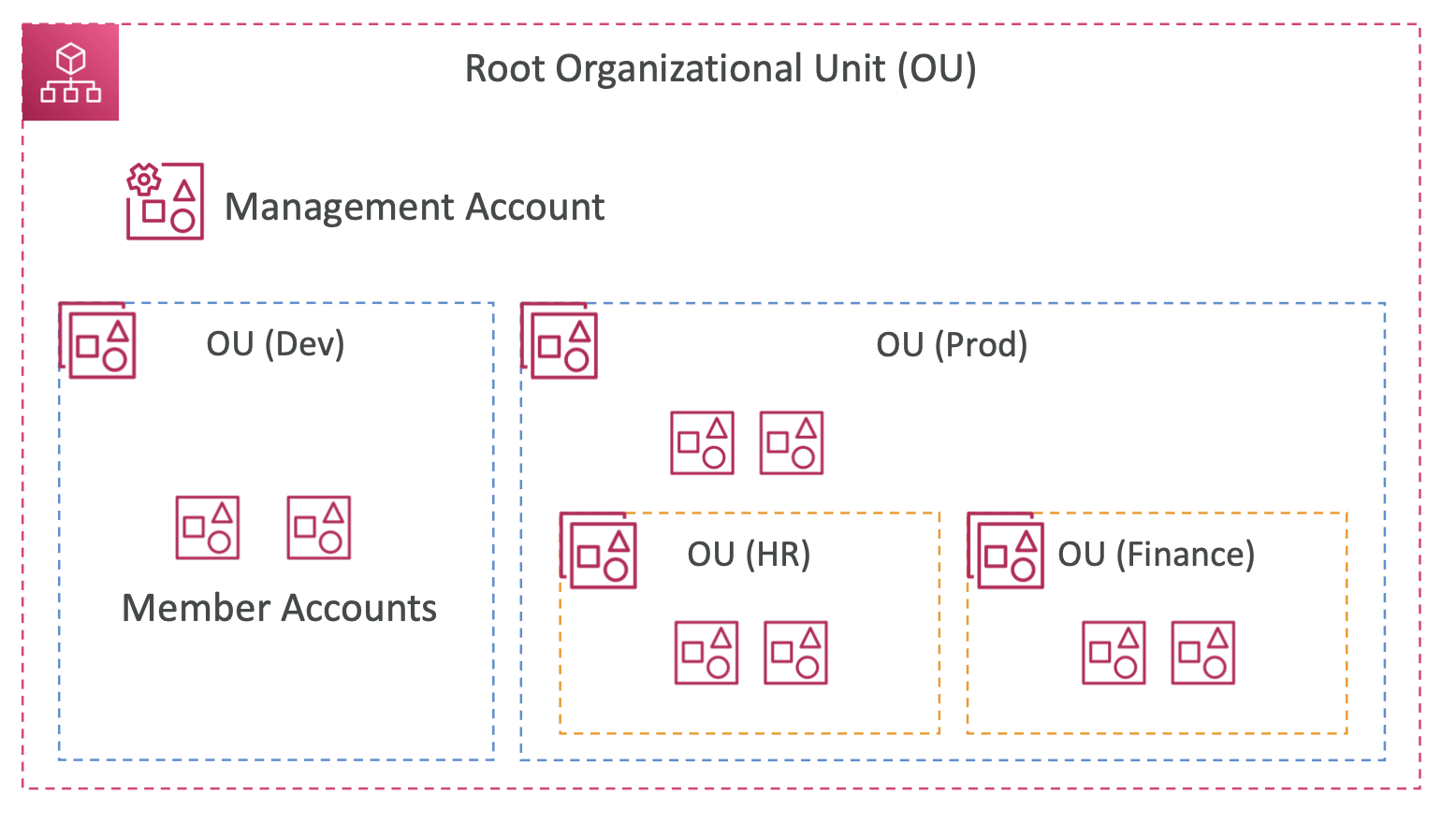

Users are people within the org and can be grouped. Groups cannot contain other groups. A user can belong to multiple groups (or none, but this is generally not best practice).

2.2. Permissions

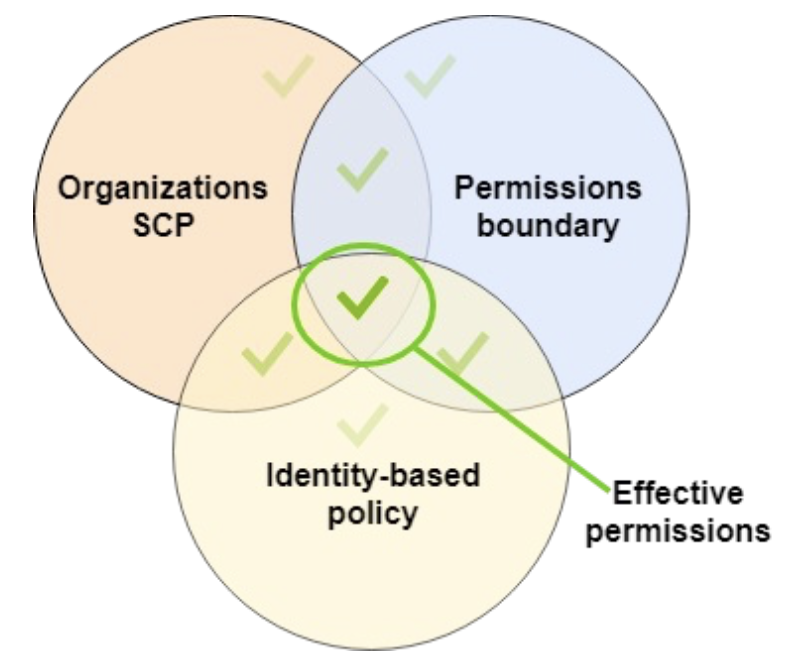

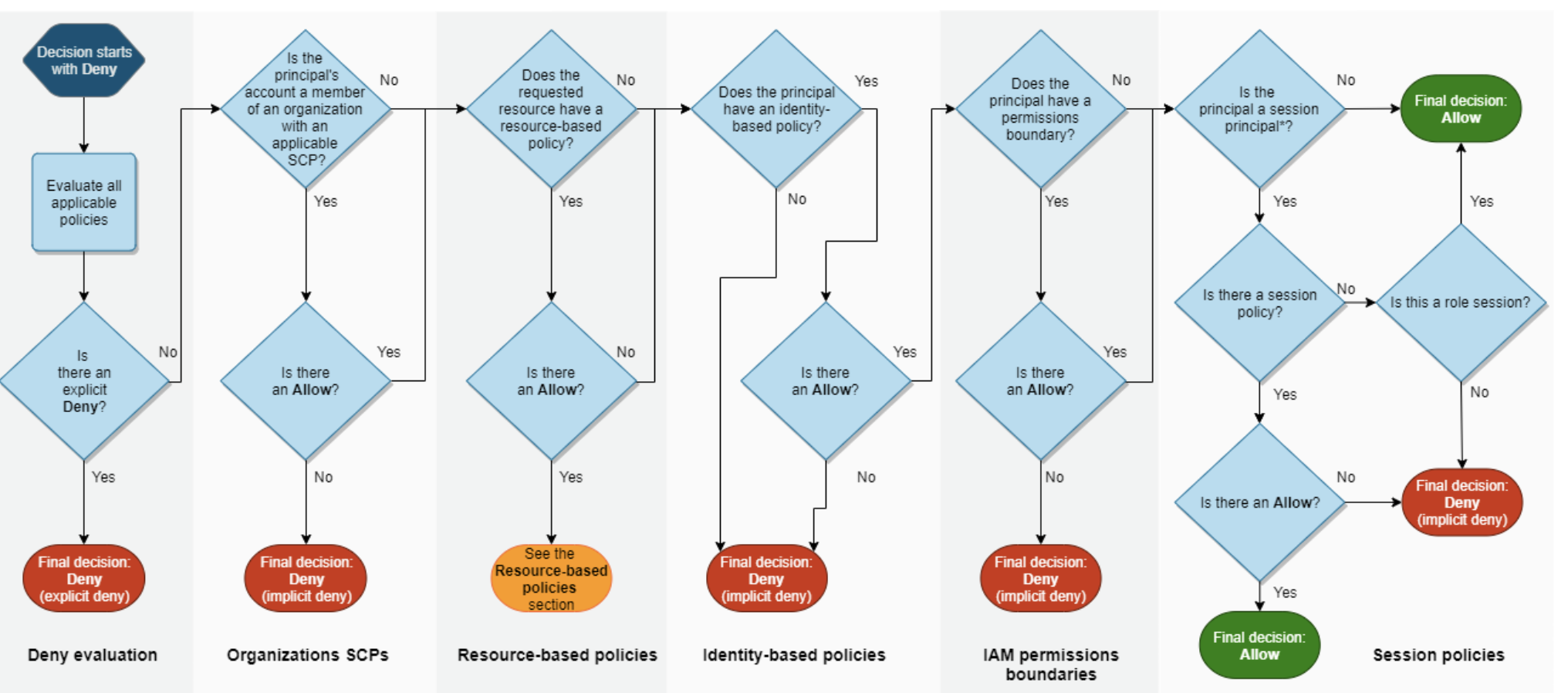

Users or groups can be assigned policies which are specified as a JSON document.

Least privilege principle means you shouldn’t give a user more permissions than they need.

2.3. Creating Users and Groups

In the IAM dashboard, there is a Users tab.

There is a Create User button. We give them a user name and can choose a password (or autogenerate a password if this is for another user).

Then we can add permissions directly, or create a group and add the user.

To create a group, specify the name and permissions policy.

Tags are optional key-value pairs we can add to assign custom metadata to different resources.

We can also create an account alias in IAM to simplify the account sign in, rather than having to remember the account ID.

When signing in to the AWS console, you can choose to log in as root user or IAM user.

2.4. IAM Policies

Policies can be attached to groups, or assigned as inline policies to a specific user. Groups are best practice.

Components of JSON document:

- Version: Policy language version (date)

- Id: Identifier for the policy

- Statement: Specifies the permissions

Each statement consists of:

- Sid: Optional identifier for the statement

- Effect: “Allow” or “Deny”

- Principal: The account/user/role that this policy applies to

- Action: List of actions that this policy allows or denies

- Resource: What the actions apply to, eg a bucket

- Condition: Optional, conditions when this policy should apply

“*” is a wildcard that matches anything.

2.5. MFA

Password policy can have different settings: minimum length, specific characters, password expiration, prevent password re-use.

Multi-factor authentication requires the password you know and the device you own to log in.

A hacker needs both to compromise the account.

MFA devices:

- Virtual MFA devices - Google Authenticator, Authy. Support for multiple tokens on a single device.

- Universal 2nd Factor Security Key (U2F) - eg YubiKey. Support for multiple root and IAM users on a single security key.

- Hardware key fob MFA device

- Hardware key fob MFA device for AWS GovCloud

2.6. Access Keys

There are 3 approaches to access AWS:

- Management console (web UI) - password + MFA

- Command line interface (CLI) - access keys

- Software Developer Kit (SDK) - access keys

Access keys are generated through the console and managed by the user. Access Key ID is like a username. Secret access key is like a password. Do not share access keys.

AWS CLI gives programmatic access to public APIs of AWS. It is open source. Configure access keys in the CLI using aws configure.

AWS SDK is for language-specific APIs.

2.7. AWS CloudShell

Access using the terminal icon in the toolbar next to the search bar.

This is an alternative to using your own terminal to access the AWS CLI. It is a cloud-based terminal.

You can pass --region to a command to run in a region other than the region selected in the AWS console.

CloudShell has a file system attached so we can upload and download files.

2.8. IAM Roles for Services

Some AWS services can perform actions on your behalf. To do so, they need the correct permissions, which we can grant with an IAM role.

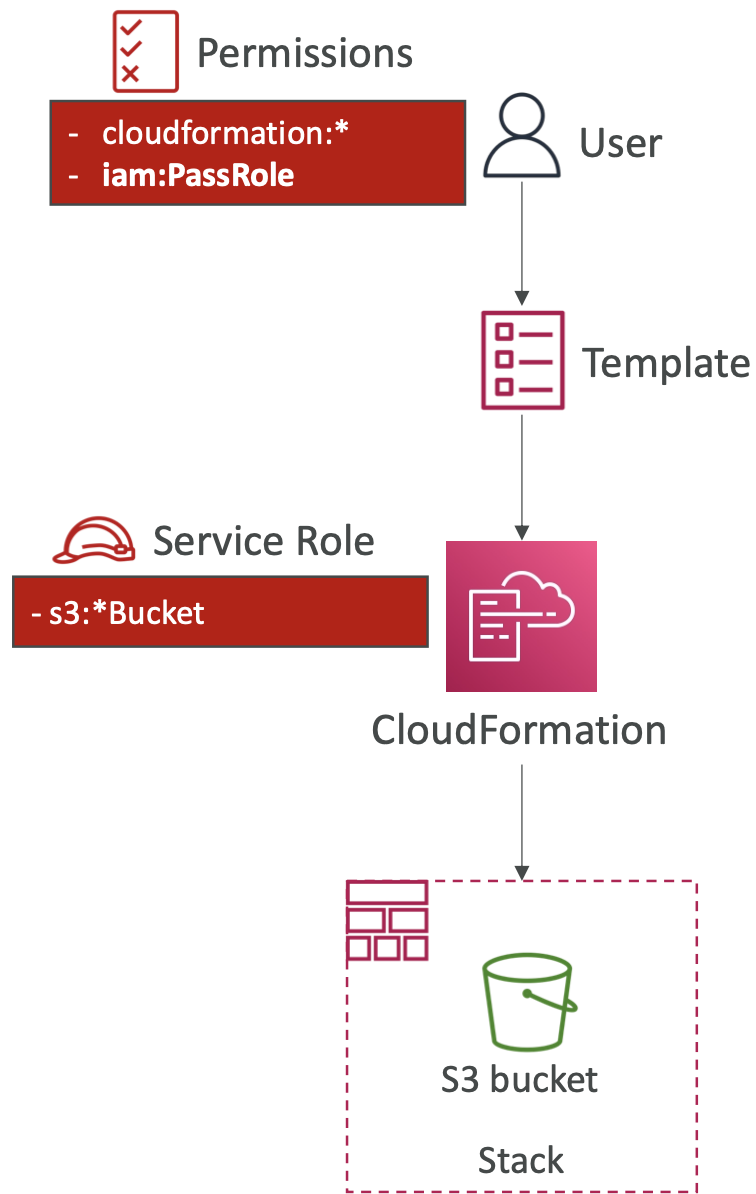

For example, EC2 instance roles, Lambda Function roles, CloudFormation roles.

In IAM, select Roles. Choose AWS Service and select the use case, e.g. EC2. Then we attach a permissions policy, such as IAMReadOnlyAccess.

2.9. IAM Security Tools

- IAM Credentials Report. Account-level report on all users and their credentials.

- IAM Access Advisor. User-level report on the service permissions granted to a user and when they were last accessed. This can help to see unused permissions to enforce principle of least privilege. This is in the Access Advisor tab under Users in IAM.

2.10 IAM Guidelines and Best Practices

- Don’t use root account except for account set up

- One physical user = One AWS user

- Assign users to groups and assign permissions to groups

- Create a strong password policy and use MFA

- Use Roles to give permissions to AWS services

- Use Access Keys for programmatic access via CLI and SDK

- Audit permissions using credentials report and access advisor

- Never share IAM users or access keys

2.11. Summary

- Users map to a physical user

- Groups contain users. They can’t contain other groups.

- Policies are JSON documents denoting the permissions for a user / group

- Roles grant permissions for AWS services like EC2 instances

- Security use MFA and password policy

- Programmatic use of services via CLI or SDK. Access keys manage permissions for these.

- Audit usage via credentials report or access advisor

3. EC2

3.1. EC2 Overview

Elastic Compute Cloud used for infrastructure-as-a-service.

Encompasses a few different use cases:

- Renting virtual machines (EC2)

- Storing data on virtual drives (EBS)

- Distributing load across machines (ELB)

- Scaling services using an auto-scaling group (ASG)

Sizing and configuration options:

- OS

- CPU

- RAM

- Storage - This can be network-attached (EBS and EFS) or hardware (EC2 Instance Store)

- Network Card - Speed of card and IP address

- Firewall rules - Security group

- Bootstrap script - Configure a script to run at first launch using and EC2 User Data script. This runs as the root user so has sudo access.

There are different instance types that have different combinations of the configuration options above.

3.2. Creating an EC2 Instance

- Specify a “name” tag for the instance and any other optional tags.

- Choose a base image. OS.

- Choose an instance type.

- Key pair. This is optional and allows you to ssh into your instance.

- Configure network settings. Public IP address, checkboxes to allow ssh access, http access

- Configure storage amount and type. Delete on termination is an important selection to delete the EBS volume once the corresponding EC2 instance is terminated.

- The “user data” box allows us to pass a bootstrap shell script.

- Check the summary and click Launch Instance.

The Instance Details tab tells you the Instance ID, public IP address (to access from the internet) and the private IP address (to access from within AWS).

We can stop an instance to keep the storage state of the attached EBS volume but without incurring any more EC2 costs. The public IP address might change it stopping and starting. The private IP address stays the same.

Alternatively, we can terminate it completely.

3.3. EC2 Instance Types

There are several families of instances: general purpose, compute-optimised, memory-optimised, accelerated computing, storage-optimised.

See the AWS website for an overview of all instances. There is also a handy comparison website here.

The naming convention is: \[ m5.large \]

mis the instance class5is the generation (AWS releases new versions over time)largeis the size within the class

The use cases for each of the instance types:

- General purpose is for generic workloads like web servers. Balance between compute, memory and networking.

- Compute-optimized instances for tasks that require good processors, such as batch processing, HPC, scientific modelling.

- Memory-optimized instances for large RAM, e.g. in-memory databases and big unstructured data processing.

- Storage-optimised instances for tasks that require reading and writing a lot of data from lcoal storage, e.g. high-frequency transaction processing, cache for in-memory databases, data warehouse.

3.4. Security Groups

Security groups control how traffic is allowed into or out of EC2 instances. They act as a “firewall” on EC2 instances.

Security groups only contain allow rules. Security groups can reference IP addresses or other security groups.

They regulate:

- Access to ports

- Authorised IP ranges (IPv4 and IPv6)

- Inbound and outbound network

By default, any inbound traffic is blocked and any outbound traffic is allowed.

A security group can be attached to multiple instances. It is locked down to a (region, VPC) combination.

The security group exists “outside” of the EC2 instance, so if traffic is blocked then the instance will never see it.

- Any time you get a timeout when trying to access your EC2 instance, it’s almost always a result of the security rule.

- If the application gives “connection refused” then it’s an application error.

- It can be helpful to keep a security group specifically for SSH access

Access security groups under:

EC2 -> Network & Security -> Security GroupsWe can set the type of connection, the port and the IP address/range.

A security group can reference other security groups, i.e. “allow traffic from any other EC2 instance which has Security Group A or Security Group B attached to it”. This saves us from having to reference IP addresses all the time, which can be handy when these are not static.

Typical ports to know:

- 21 - FTP, file transfer protocol

- 22 - SSH or SFTP (because SFTP uses SSH), secure shell and secure FTP

- 80 - HTTP, access unsecured websites

- 443 - HTTPS, access secured websites

- 3389 - RDP, Remote Desktop Protocol, SSH equivalent for Windows

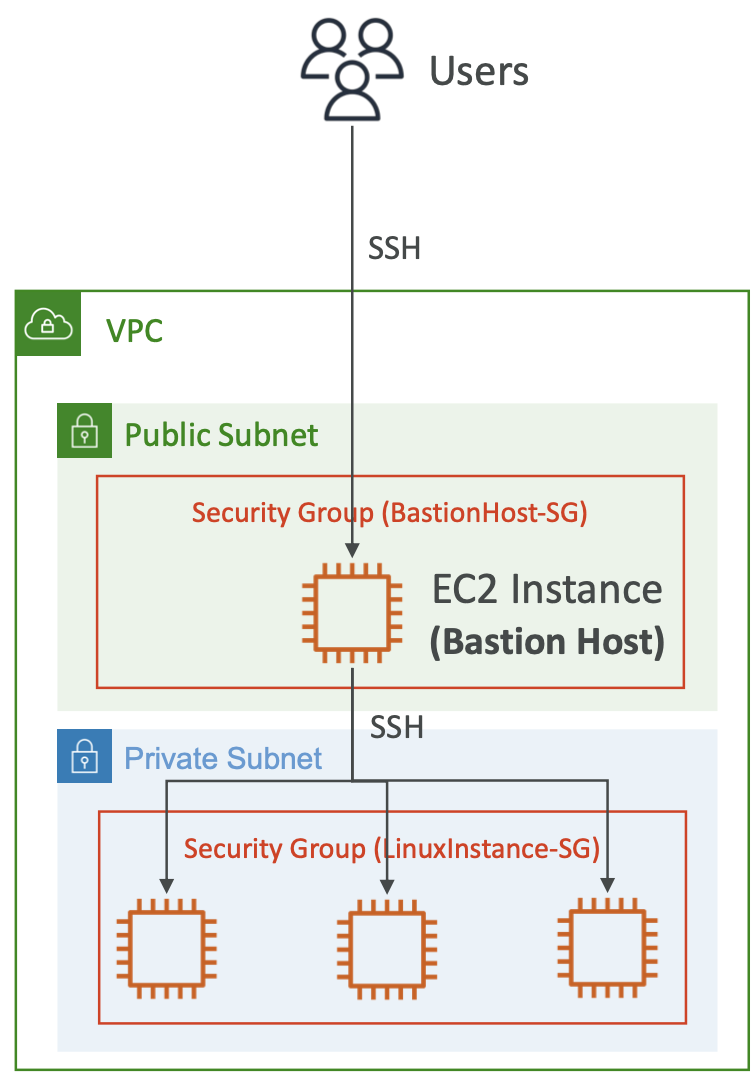

3.5. Connecting to Instances

SSH works for Linux, Mac or Windows > 10. Putty works for all versions of Windows. EC2 Instance Connect works for all operating systems.

3.5.1. Linux via SSH

SSH allows you to control a remote machine using the command line.

You need you pem / ppm file for your secure keys. The EC2 instance needs to allow inbound connections for SSH access.

ssh EC2-<username>@<public IP address>We can pass the file path for the key with the argument -i path/to/file.pem

3.5.2. EC2 Instance Connect

This opens a terminal in browser. No security keys are required since it generates temporary keys.

This relies on SSH behind the scenes, so the correct security groups for SSH need to be allowed on the EC2 instance.

Use the EC2 Instance Connect tab in the EC2 section for your running instance.

3.6. EC2 Instance Roles

Never enter your IAM details on an EC2 instance as this would be available to anybody else who can access the instance. Poor security practices!

Instead, we use EC2 instance roles.

In the tab for the instance tab, we can do this with:

Action -> Security -> Modify IAM RoleThen select a role to attach to the instance.

3.7. EC2 Instance Purchase Options

3.7.1. Purchase Options

More common: - Spot: short workloads, cheap but can be terminated. Not suitable for critical jobs or databases. - On-demand: short uninterrupted workloads, pay per second - Reserved: long workloads like a database. 1 or 3 years. Convertible reserved instances allow you to change the instance type over the reserved period. - Savings plan: 1 to 3 year commitment to an amount of USAGE rather than committing to a specific instance size or OS.

Less common: - Dedicated hosts: book an entire physical server and control instance placement. Most expensive. Useful to meet compliance requirements, or where you have Bring Your Own Licence (BYOL) software. - Dedicated instances: no other customers share your hardware. No control over instance placement, so the physical hardware might move after stopping and starting. May share hardware with other instances in the same account. - Capacity reservations: reserve capacity in your AZ for any duration. No time commitment and no billing discounts. You’re charged on demand rates whether you run the instance or not. Suitable for short term interrupted workloads that need to be in a specific AZ.

3.7.2. IPv4 Charges

There is a $0.005 per hour charge for all public IPv4 in your account.

There is a free tier for the EC2 service. There is no free tier for any other service.

There is no charge for IPv6 addresses. But this does not work for all ISPs.

AWS Public IP Insights Service under Billing is helpful to see these costs.

3.7.3. Spot Instances

Discount of up to 90% compared to on demand instances.

You define the max spot price you are willing to pay, and you get the instance for as long as the current price is less than your max price. The hourly spot price varies by offer and capacity. If the current price rises above your max, you have a 2 minute grace period to stop or terminate your instance.

“Spot Block” is a strategy to block a spot instance for a specified time frame (1-6 hours). They are no longer available but could potentially still come up on the exam.

A spot request consists of:

- Max price

- Desired number of instances

- Launch specification - AMI

- Request type: one-time or persistent. A persistent request will automatically request more spot instances whenever any are terminated, for as long as the spot request is valid.

- Valid from and until

Spot Instance Requests can only be cancelled if they are open, active or disabled. Canceling a spot request does not terminate the instance. You need to cancel the request then terminate the instance, to ensure a persistent request does not launch another.

3.7.4. Spot Fleets

A spot fleet is a set of spot instances + optional on-demand instances.

It will try to meet the target capacity within the price constraints. You specify the launch pool: instance type, OS and availability zone. You can have multiple launch pools so the fleet can choose the best. It will stop launching instances either when it reaches target capacity or max cost.

There are several strategies for allocating spot instances:

- lowestPrice: from the pool with lowest price

- diversified: distributed across pools for better availability

- capacityOptimized: from the pool with optimal capacity

- priceCapacityOptimized (recommended): pool with highest capacity first, then lowest price

4. EC2 Networking

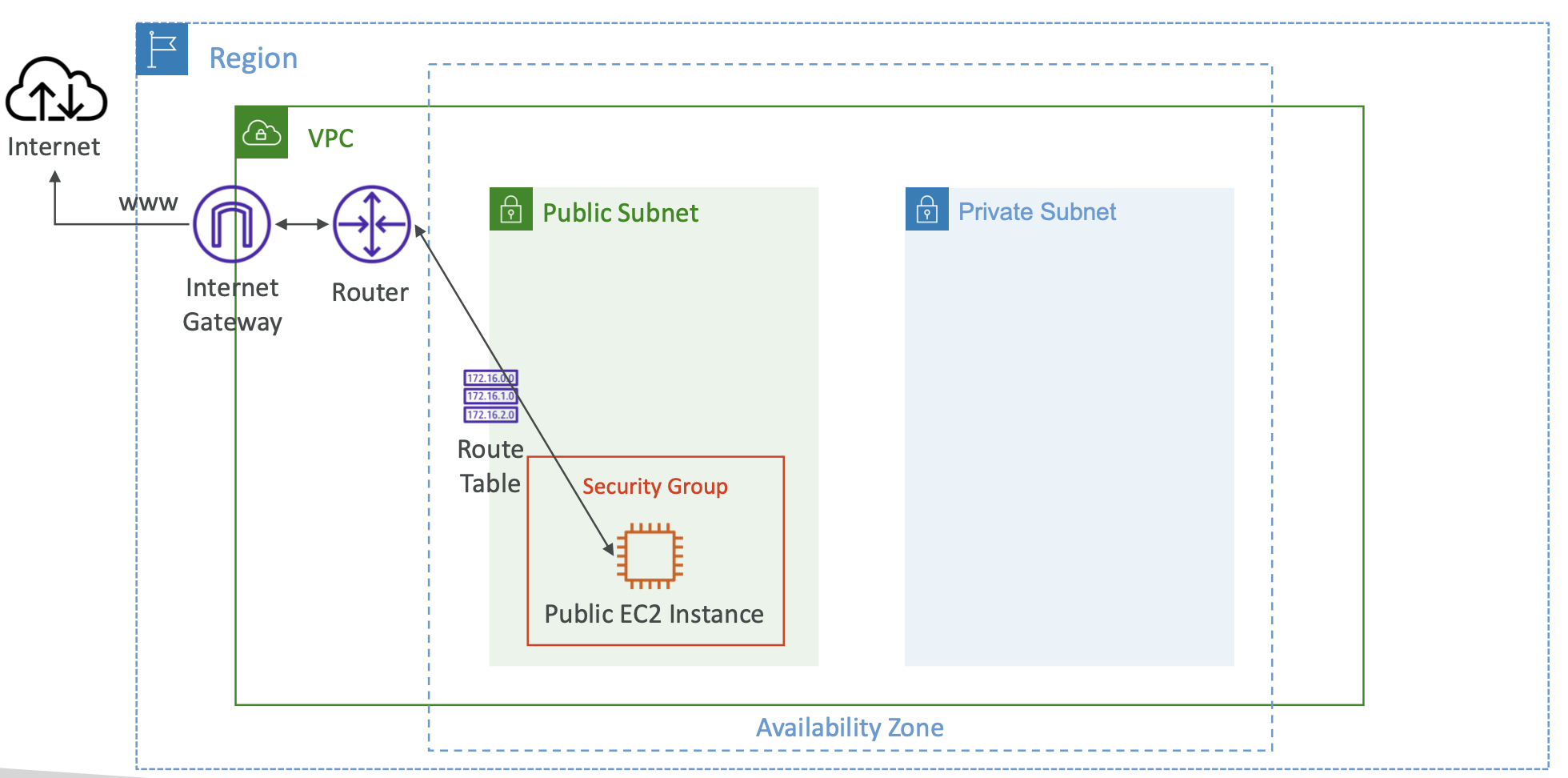

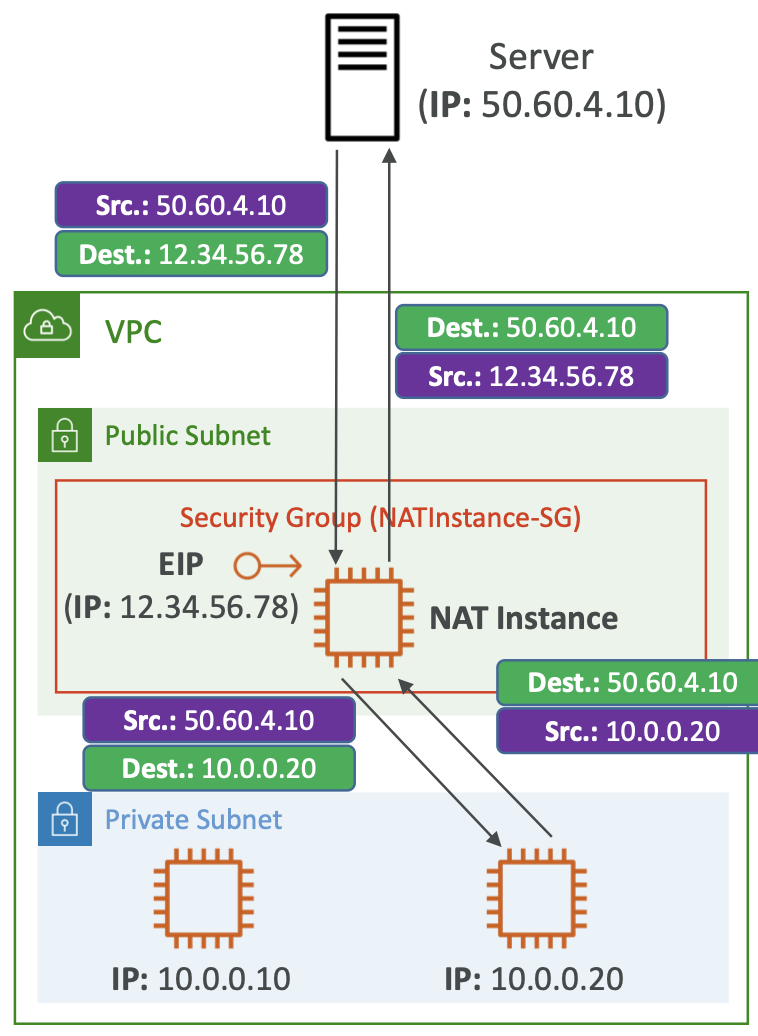

4.1. Private vs Public IP

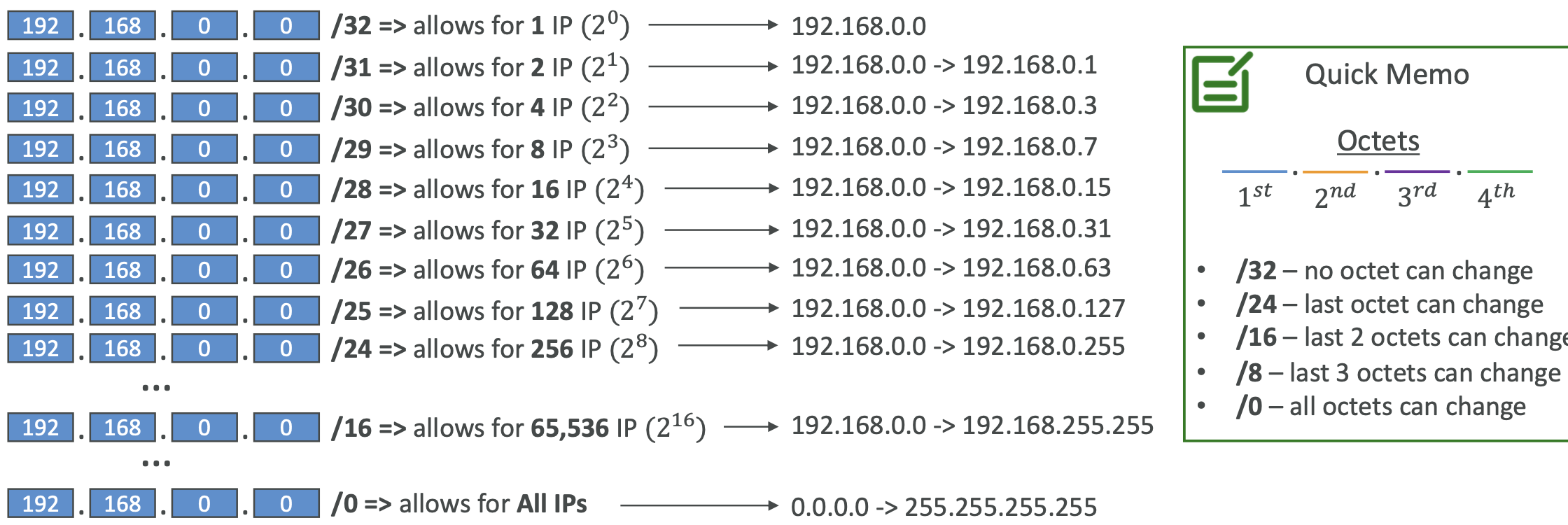

There are two types of IP in networking: IPv4 and IPv6. v4 is most commonly used, v6 is for IoT.

Public IP means the machine can be identified on the internet. It must be unique across the whole web.

Private IP means the machine can only be located on the private network. It must be unique across that private network. Only a specified range of IP addresses can be used as private addresses. Machines connect to the internet using an internet gateway (a proxy).

4.2. Elastic IP

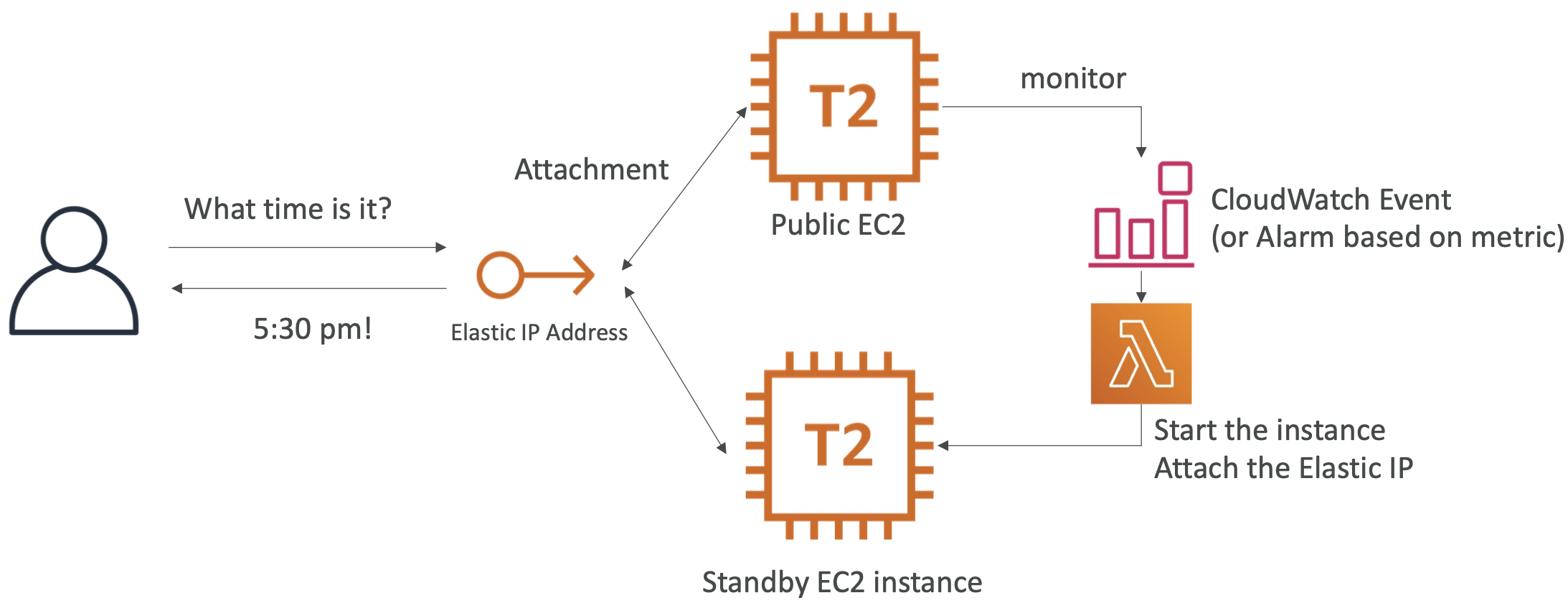

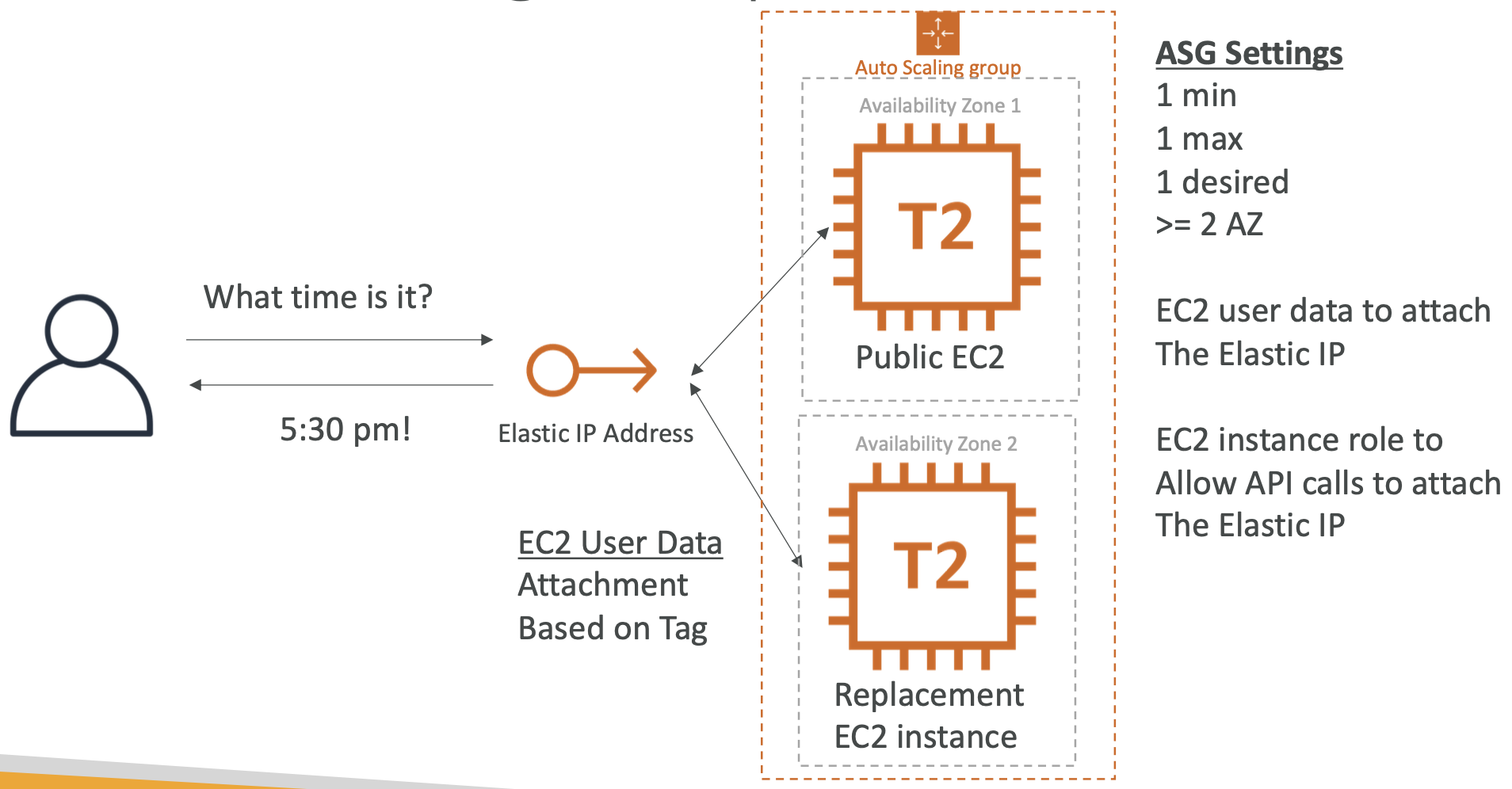

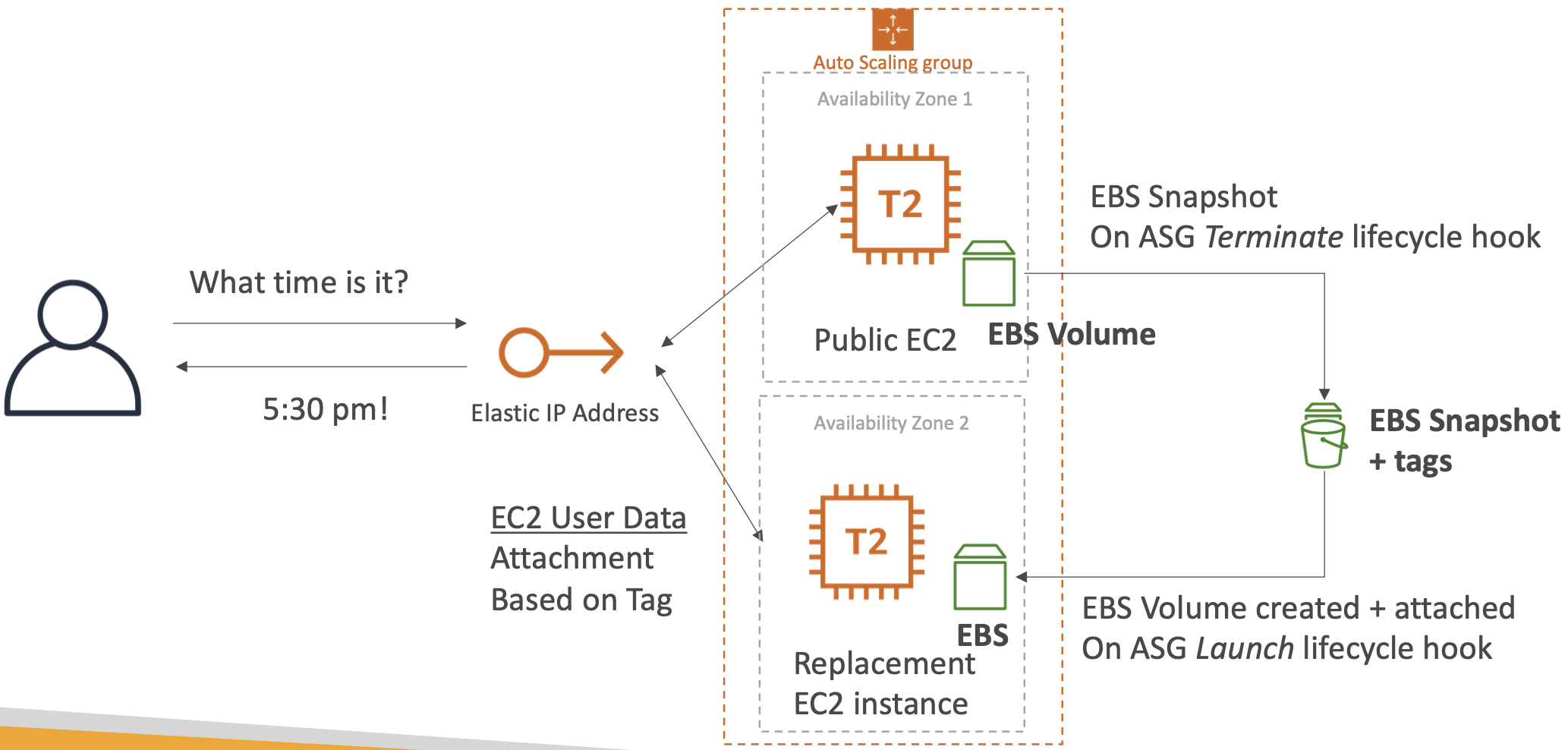

When you start and stop an EC2 instance it can change its IP. If you need this to be fixed, you can use elastic IP which will get reused for future instances if that one gets terminated. You can only have 5 elastic IP addresses in your account.

It is best practice to avoid elastic IP addresses as they often are a symptom of bad design choices. Instead, use a random public IP and register a DNS name to it. Or alternatively, use a load balancer and don’t use a public IP.

4.3. Placement Groups

Placement groups allow you to control the EC2 instance placement strategy. You don’t get direct access to / knowledge of the hardware, but you can specify one of three strategies:

- Cluster - cluster instances into a low latency group in a single AZ. High performance but high risk; low latency and high bandwidth. Useful for big data jobs that need to complete quickly.

- Spread - spread instances across different hardware (max 7 instances per AZ). Useful for critical applications as the risk of all instances simultaneously failing is minimised. But the max instance count limits the size of the job.

- Partition - Spread instances across many different sets of partitions within an AZ. Each partition represents a physical rack of hardware. Max 7 partitions per AZ, but each partition can have many instances. Useful for applications with hundreds of instances or more, like Hadoop.

Creating a Placement Group

To create a placement group:

EC2 -> Network & Security -> Placement Groups -> Create Placement GroupGive it a name, e.g. my-critical-group, then select one of the three strategies.

To launch an instance in a group:

Click Launch Instances -> Advanced Settings -> Placement Group Name4.4. Elastic Network Interfaces

4.4.1. What is an ENI?

Elastic Network Interfaces (ENIs) represent a virtual network card in a VPC. They are bound to a specific AZ.

Each ENI can have the following attributes:

- One private IPv4, plus one or more secondary IPv4 addresses

- One Elastic IPv4 per private IPv4

- One public IPv4

- One or more security groups

- One MAC address

An ENI can be created and then attached to EC2 instances on the fly. This makes them useful for failover, as the ENI from the failed instance can be moved to its replacement to keep the IP addresses consistent.

Another use case is for deployments. We have the current version of the application running on instance A with an ENI. This is accessible by its IP address. Then we can run the new version of the application of instance B. When we are ready to deploy, move the ENI to instance B.

4.4.2. Creating an ENI

Click on the Instance in the UI and see the Network Interfaces section.

Under the Network & Security tab we can see Network Interfaces. We can create ENIs here.

Specify: description, subnet, Private IPv4 address (auto assign), attach a Security Group.

4.4.3 Attaching an ENI to an Instance

On the Network Interfaces UI, Actions -> Attach. Select the instance.

More on ENIs: https://aws.amazon.com/blogs/aws/new-elastic-network-interfaces-in-the-virtual-private-cloud/

4.5. EC2 Hibernate

4.5.1. Why Hibernate?

When we stop an instance, the data on disk (EBS) is kept intact until the next start. When we start it again, the OS boots up and runs the EC2 User Data script. This can take time.

EC2 Hibernate is a way of reducing boot time. When the instance is hibernated, the RAM state is saved to disk (EBS). When the instance is started again, it loads the RAM state from disk. This avoids having to boot up and initialise the instance from scratch.

Use cases:

- Long-running processing

- Services that take time to initialise

An instance can not be hibernated for more than 60 days. The instance RAM size must be less than 150GB and the EBS root volume large enough to store it.

4.5.2. Enable Hibernation on an Instance

We can enable hibernation when creating an instance under Advanced Details, there is a “Stop - Hibernate Behaviour” drop down that we can enable.

Under Storage, the EBS volume must be encrypted and larger than the RAM.

To then hibernate a specific instance, on the Instance Summary select Instance State -> Hibernate Instance.

5. EC2 Instance Storage

5.1. EBS

5.1.1. What is an EBS Volume?

An Elastic Block Storage (EBS) volume is a network drive that you can attach to your instance. Think of it like a “cloud USB stick”. They allow us to persist data even after an instance is terminated. EBS volumes have a provisioned capacity: size in GB and IOPS.

Each EBS volume can only be mounted to one EC2 instance at a time, and are bound to a specific AZ. To move a volume across an AZ, you need to snapshot it. Each EC2 instance can have multiple EBS volumes.

There is a “delete on terminate” option. By default, this is on for the root volume but not any additional volumes. We can control this in the AWS console or CLI.

5.1.2. Creating an EBS Volume on an Instance

We can see existing volumes under

EC2 -> Elastic Block Store -> VolumesWe can select Create Volume. We then choose volume type, size, AZ (same as instance).

This makes the volume available. We can then attach the volume using Actions -> Attach Volume. The Volume State will now be “In-use” instead of “Available”.

5.1.3. EBS Snapshots

A snapshot is a backup of your EBS volume at a point in time.

It is recommended, but not necessary, to detach the volume from an instance.

We can copy snapshots across AZ and regions.

Features:

- EBS Snapshot Archive. Moving the snapshot to an archive tier is much cheaper (75%) but then takes 24-72 hours to restore.

- Recycle bin. There are setup rules to retain deleted snapshots so they can be restored after deletion. Retention period is 1 day to 1 year.

- Fast snapshot restore (FSR). Force full initialisation of snapshot to have no latency. This costs more.

5.1.4. EBS Features Hands On

Create an EBS volume:

Elastic Block Store UI, Actions -> Create Snapshot -> Add a descriptionSee snapshots:

EBS -> Snapshots tabCopy it to another region:

Right-click volume -> Copy Snapshot -> Select the description and destination regionRecreate a volume from a snapshot:

Select the snapshot -> Actions -> Create Volume From Snapshot -> Select size and AZArchive the snapshot:

Select the volume -> Actions -> Archive SnapshotRecover a snapshot after deletion:

Recycle Bin -> Select the snapshot -> Recover5.1.5. EBS Volume Types

EBS volumes are characterised by: size, throughput and IOPS.

Types of EBS volumes:

- gp2/gp3 - General purpose SSD. The newer gp3 options allow size and IOPS to be varied independently, for the older gp2 types they were linked.

- io1/io2 Block Express - High throughput low latency SSD. Support EBS Multi Attach.

- st1 - Low cost HDD for frequently accessed data.

- sc1 - Lowest cost HDD for infrequently accessed data.

Only the SSD options can be used as boot volumes.

5.1.6. EBS Multi Attach

Attach the same EBS volume to multiple EC2 instances (up to 16) in the same AZ.

Only available for io1 and io2 EBS volume types. You must use a file system that is cluster-aware.

For use cases with higher application availability in clustered applications, or where applications must manage concurrent write operations.

5.1.7. EBS Encryption

When you create an encrypted EBS volume you get:

- Data at rest is encrypted inside the volume

- Data in flight is encrypted between the volume and the instance

- Snapshots are encrypted

- Volumes created from the snapshot are encrypted

Encryption and decryption is all handled by AWS. The latency impact is minimal. It uses KMA (AES-256) keys.

Copying an unencrypted snapshot allows encryption:

- Create an EBS snapshot of the volume.

- Encrypt the snapshot using copy.

- Create a new EBS volume from the snapshot. This will be encrypted.

- Attach the encrypted volume to the original instance.

5.2. AMI

Amazon Machine Image (AMI) is the customisation of an EC2 instance. It defines the OS, installed software, configuration, monitoring, etc. AMIs are built for a specific region.

Putting this in the AMI rather than the boot script results in a faster boot time since the software is prepackaged.

We can launch EC2 instances from:

- A public AMI (provided by AWS)

- An AMI from the AWS Marketplace (provided by a third-party)

- Your own AMI

Create an AMI from a running instance that we have customised to our liking:

Right-click the instance -> Images and Templates -> Create ImageSee the AMI:

EC2 UI -> Images -> AMIsLaunch an instance from an AMI

Select the AMI -> Launch Instance from AMI5.3. EC2 Instance Store

EBS volumes are network drives, which gives adequate but potentially slow read/write.

EC2 Instance Store is a physical disk attached to the server that is running the EC2 instance.

They give better I/O performance but are ephemeral, the data is lost if the instance is stopped or the hardware fails.

Good for cache or temporary data.

5.4. Elastic File System (EFS)

5.4.1. What is EFS?

EFS is a managed Network File System (NFS) that can be mounted on multiple EC2 instances. The EC2 instances can be in multiple AZs.

Highly available, scalable, but more expensive. It scales automatically and you pay per GB. You don’t need to plan the capacity in advance.

A security group is required to control access to EFS. It is compatible with Linux AMIs only, not Windows. It is a POSIX (Linux-ish) file system with the standard API.

Uses cases: content management, web serving, data sharing, Wordpress.

5.4.2. Performance Modes

- EFS Scale - This gives thousands of concurrent NFS clients for >10GB/s of throughput.

- Performance modes - This can be set to general purpose for latency-sensitive use cases, or Max I/O for higher throughput at the expense of higher latency.

- Throughput mode - This can be set to bursting which scales throughput with the total storage used, provisioned which sets a fixed throughput, or elastic which scales throughput depending on the demand (ie the requests received)

5.4.3. Storage classes

Storage tiers are a lifecycle management feature to move files to cheaper storage after N days. You can implement lifecycle policies to automatically move files between tiers based on the number of days since it was last accessed.

- Standard. For frequently accessed files.

- Infrequent access (EFS-IA). There is a cost to retrieve files, but lower cost to store.

- Archive. For rarely accessed data.

There are two different availability options:

- Regional. Multi-AZ within a region, good for production.

- One zone. Only one AZ with backup enabled by default. Good for dev.

5.5. EBS vs EFS

EBS volumes are attached to one instance (mostly, apart from multi-attach) and are locked at the AZ level.

EFS can be mounted to hundreds of instances across AZs. It is more expensive, but storage tiers can help reduce this.

Instance Store is attached to a specific instance, and is lost when that instance goes down.

6. ELB and ASG

6.1. Scalability and Availability

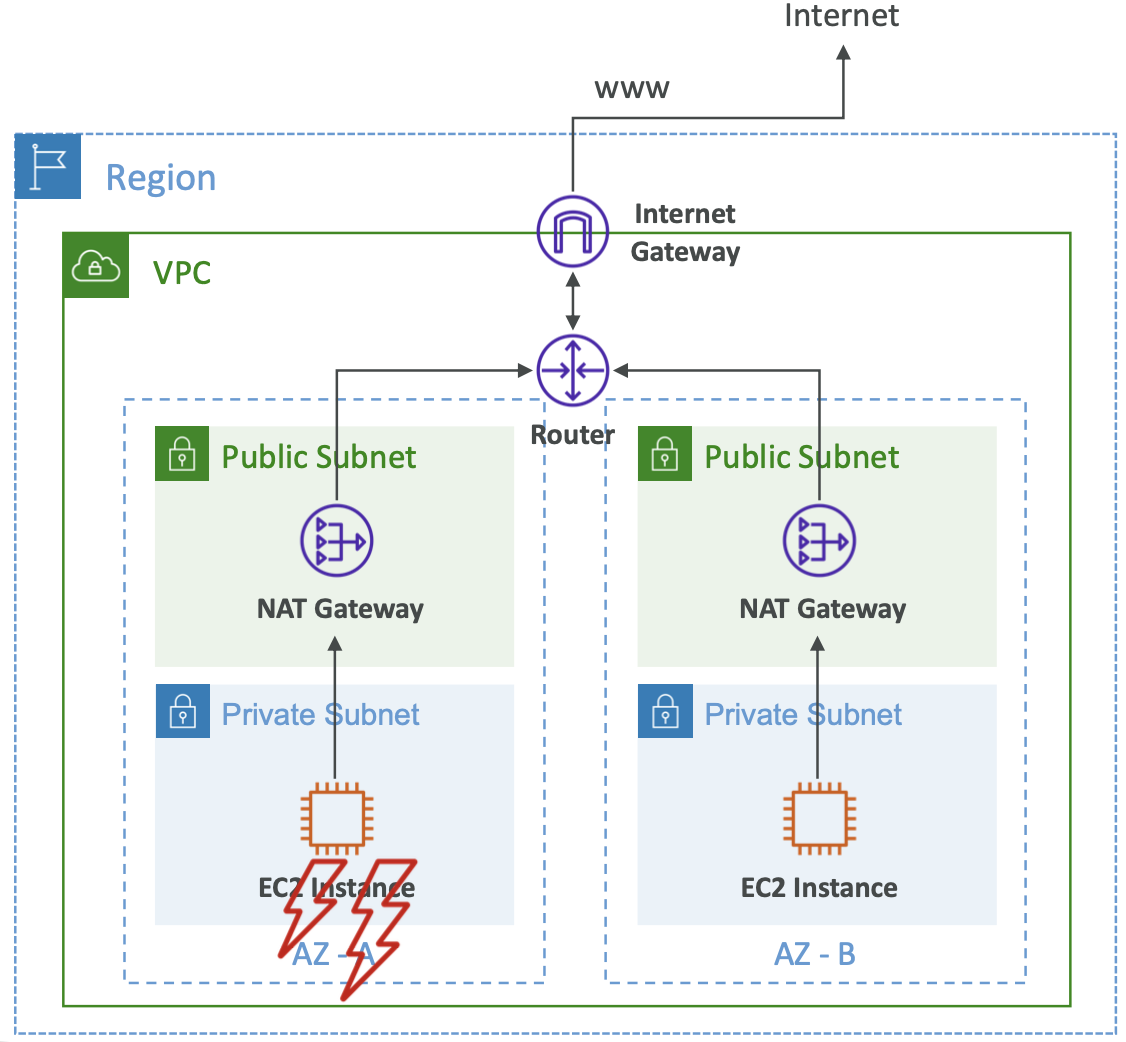

Scalability means an application can adapt to handle greater loads.

- Vertical scalability. Increase the size of a single instance. The scaling limit is often a hardware limit. “Scale up and down”.

- Horizontal scalability. Also called elasticity. Distribute across more instances. “Scale out and in”.

High availability is the ability to survive a data center loss. This often comes with horizontal scale. Run the application across multiple AZs.

6.2. ELB

6.2.1. Load balancing

Load balancers are servers that forward traffic to multiple servers downstream.

Benefits:

- Spread the load across downstream instances

- Perform health checks on instances and handle downstream failures

- Expose a single point of access (DNS) to your application

- Provide SSL termination

- Separate public traffic from private traffic

- High availability across zones

Elastic Load Balancer (ELB) is a managed load balancer.

Health checks are done on a port and route, and return a 200 status if it can be reached.

There are four kinds of managed load balancer:

- Classic load balancer (Deprecated)

- Application load balancer

- Network load balancer

- Gateway load balancer

Some can be set up as internal (private) or external (public).

6.2.2. Security Groups

Users can connect via HTTP/HTTPS from anywhere. So the security groups typically allow inbound TCP connections on ports 80 and 443.

The security groups for the downstream EC2 instances then only needs to allow inbound connections from the load balancer, i.e. one specific source. This means we can forbid users from connecting directly to the instance and force them to go via the load balancer.

6.2.3. Application Load Balancer (ALB)

These are layer 7 load balancers, meaning they take HTTP requests. They support HTTP/2 and WebSocket, and can also redirect from HTTP to HTTPS.

You get a fixed hostname, i.e. XXX.region.elb.amazonaws.com. This is helpful to get a fixed IP to connect to instances which are being created and destroyed, where IP addresses are normally changing constantly. The application servers don’t see the IP of the client directly, but this is inserted as a header X-forwarded-for. We also headers for the port and protocol.

Use cases are microservices and container-based applications (e.g. ECS). One load balancer can route traffic between multiple applications. There is a port mapping feature to redirect to a dynamic port in ECS.

They can route requests to multiple HTTP applications across machines (called target groups) or multiple applications on the same machine (e.g. containers).

Routing options:

- By path in URL - e.g.

/usersendpoint,/blogendpoint - By hostname in URL - e.g.

one.example.comandtwo.example.com - By query string headers - e.g.

/id=123&orders=True

ALB can route to multiple target groups. Health checks are at the target group level. Target groups can be:

- EC2 instances

- ECS tasks

- Lambda functions

- Private IP addresses

6.2.4. Network Load Balancer (NLB)

These are layer 4 load balancers, meaning they route TCP and UDP traffic. Ultra-low latency and can handle millions of requests per second. NLB has one static IP per AZ.

Target groups can be:

- EC2 instances

- Private IP addresses

- Application load balancers. You may want the NLB for a static IP, routing to an ALB for the http routing rules.

Health checks support TCP, HTTP and HTTPS protocols.

6.2.5. Gateway Load Balancer (GWLB)

This is a layer 3 load balancer, meaning it routes IP packets.

This is useful when we want to route traffic via a target group of a 3rd party network virtual appliance (e.g. a firewall) before it reaches our application.

User traffic > GWLB > Firewall > GWLB > Our application It uses the GENEVE protocol on port 6081.

Target groups can be:

- EC2 instances

- Private IP addresses

6.2.6. Sticky Sessions

Stickiness means a particular client is always routed to the same instance behind the load balancer. This means the user doesn’t lose their session data. It does this via a cookie which has an expiration date.

Overusing sticky sessions can result in imbalanced loads, since they’re constraining the load balancer to direct traffic to instances that may not be optimal.

Application-based cookies. Two options for this: - A custom cookie is generated by the target. The cookie name must be specified for each target group and cannot be one of the reserved keywords: AWSALB, AWSALBAPP, AWSALBTG. - An application cookie is generated by the load balancer. The cookie name is always AWSALBAPP.

Duration-based cookies. This is generated by the load balancer. The cookie name is always AWSALB for ALB (or AWSELB for CLB).

ELB UI -> Target Groups -> Select a target group -> Edit Target Group

-> Turn On Stickiness -> Select cookie type and duration6.2.7. Cross-Zone Load Balancing

With cross-zone load balancing, each load balancer will distribute requests evenly across all registered instances in all AZs, regardless of which zone the request came from.

Without cross-zone load balancing, there can be big disparities between load in different AZs.

Cross-zone load balancing is enabled by default for ALB and CLB, and won’t charge for data transfer between AZs. It is disabled by default for NLB and GWLB. These will charge for inter-AZ data transfer if you enable it.

6.2.8. Connection Draining

The load balancer allows time to complete “in-flight requests” while the instance is registering or unhealthy. The load balancer stops sending new requests to the EC2 instance which is de-registering.

The is called connection draining for CLB, and deregistration delay for ALB and NLB.

It can be 0-3600 seconds. By default it is 300 seconds. Disable connection draining by setting it to 0.

6.3. SSL Certificates

6.3.1. SSL and TLS

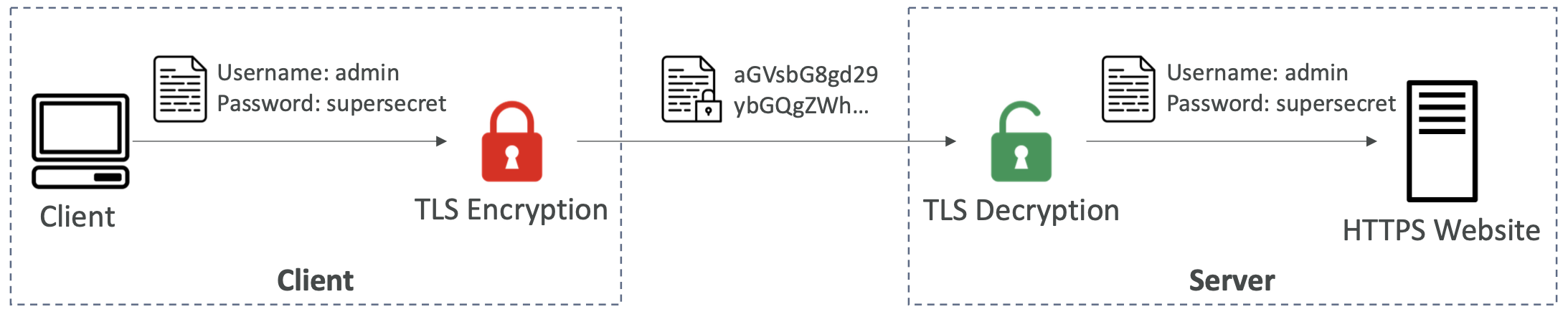

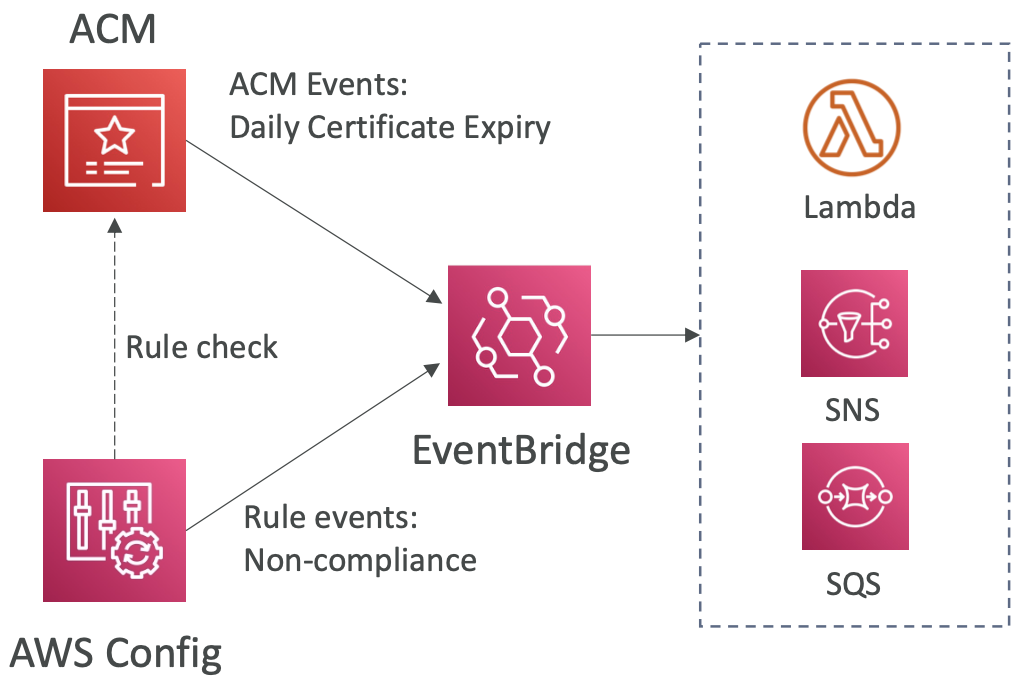

An SSL certificate allows in-flight encryption - traffic between clients and load balancer is encrypted. They have an expiration date (that you set) and must be renewed. Public SSL certificates are issued by Certificate Authorities (CA) like GoDaddy, GlobalSign etc.

TLS certificates are actually used in practice, but the name SSL has stuck.

- SSL = Secure Sockets Layer

- TLS = Transport Layer Security (a newer version of SSL)

The load balancer uses a default X.509 certificate, but you can upload your own. AWS Certificate Manager (ACM) allows you to manage these certificates.

6.3.2. SNI

Clients can use Server Name Indication (SNI) to specify the hostname they reach.

SNI solves the problem of loading multiple SSL certificates on one web server. We may have a single load balancer serving two websites: www.example.com and www.company.com

Each of these websites has an SSL certificate uploaded to the load balancer. When a client request comes in, it indicates which website it wants to reach and the load balancer will use the corresponding SSL certificate.

This works for ALB, NLB or CloudFront.

ELB UI -> Select a load balancer -> Add a listener -> Select the default SSL/TLS certificate6.4. Auto Scaling Groups (ASG)

6.4.1. What is an ASG?

The goal of an ASG is to scale out/in (add/remove EC2 instances) to match load. It ensures we have a minimum and maximum number of instances running.

If running an ASG connected to a load balancer, any EC2 instances created will be part of that load balancer.

ASG is free, you only pay for the underlying EC2 instances.

You need to create a ASG Launch Template.

- AMI and instance type

- EC2 user data

- EBS volumes

- Security groups

- SSH key pair

- IAM roles for EC2 instances

- Network and subnet information

- Load balancer information

The ASG has a minimum, maximum and initial size as well as a scaling policy.

It is possible to scale the ASG based on CloudWatch alarms.

6.4.2. Scaling Policies

Dynamic scaling:

- Target tracking scaling. Keep a certain metric, e.g. ASG CPU, to stay at 40%

- Simple / step scaling. When a CloudWatch alarm is triggered, e.g. CPU > 70%, add 2 units.

Scheduled scaling:

- Anticipate scaling based on known usage patterns. E.g. market open.

Predictive scaling:

- Continuously forecast load and schedule scaling accordingly.

Good metrics to scale on:

- CPUUtilization

- RequestCountPerTarget

- Average Network In/Out

- Application-specific metrics

6.4.3. Scaling Cooldown

After a scaling activity happens, there is a cooldown period (default 300 seconds) where the ASG will not launch or remove any more instances while it waits for metrics to stabilise.

Using a ready-to-use AMI means the EC2 instances start quicker, allowing you to use a shorter cooldown and be more reactive.

7. Relational Databases

7.1. RDS

7.1.1 What is Relational Database Service?

Relational Database Service (RDS) is a managed DB using SQL as a query language.

Supported database engines: Postgres, MySQL, MariaDB, Oracle, Microsoft SQL Server, IBM DB2, Aurora (AWS proprietary database).

Instead of RDS, we could run our own EC2 instance with a database inside. The benefit of RDS is that it is a managed service, so you get:

- Automated provisioning and OS patching

- Continuous backups and point-in-time restore

- Monitoring dashboards

- Read replicas

- Multi-AZ setup for disaster recovery

- Maintenance windows for upgrades

- Horizontal and vertical scaling capabilities

- Storage backed by EBS

But the downside is you can’t SSH into the underlying instances.

7.1.2. Storage Auto-Scaling

RDS will increase your DB instance automatically as you run out of free space. You set a Maximum Storage Threshold.

This will automatically modify storage if:

- Free storage is less than 10%

- Low-storage lasts at least 5 mins

- 6 hours have passed since the last notification

7.1.3. Read Replicas

Read replicas allow better read scalability. Read replicas are obviously read-only, so only support SELECT statements.

We can create up to 15 read replicas. They can be within AZ, cross-AZ or cross-region. The replication is asynchronous so they are eventually consistent. Replicas can be promoted to their own DB.

Applications must update their connection string to use the read replicas.

Use cases may be if you have an existing production application, and now you want to add a reporting application without affecting performance of the existing process.

Network costs:

- If the read replicas are in the same region, there is no network costs for the data transfer.

- There is a network cost for cross-region read replicas.

7.1.4. Multi-AZ

This is typically for disaster recovery.

This is a synchronous replication.

There is one DNS name, and the application will automatically failover to the standby database if the master database goes down. No manual intervention is required. This increases availability.

Aside from the disaster case, no traffic is normally routed to the standby database. It is only for failovers, not scaling.

You can set up read replicas as multi-AZ for disaster recovery.

Single-AZ to multi-AZ is a zero downtime operation, the DB does not stop. We just click “modify” on the database.

Internally, what happens is: a snapshot it taken, a new DB is restored from this snapshot in a new AZ, synchronisation is established between the two databases.

7.1.5. RDS Custom

This is a managed Oracle and Microsoft SQL Server database with full admin access for OS and database customisation. Usually these are managed by RDS.

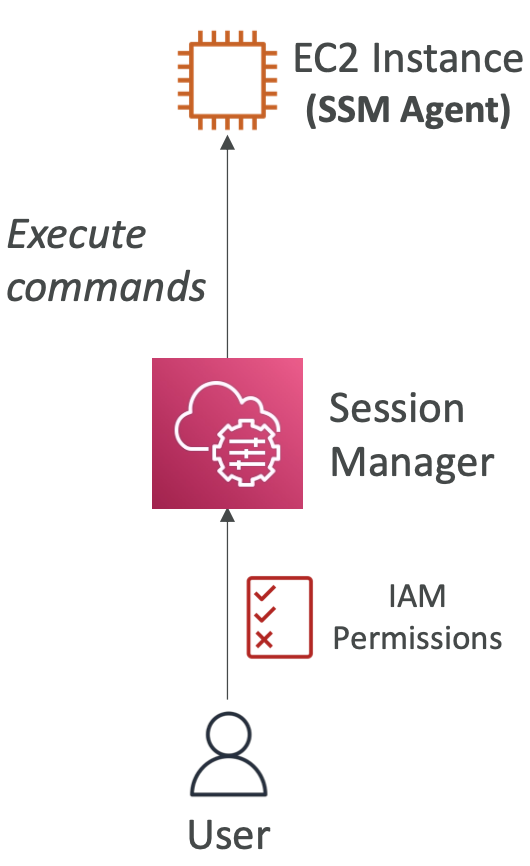

It allows us to configure the OS and settings, and access the underlying EC2 instance using SSH or SSM Session Manager.

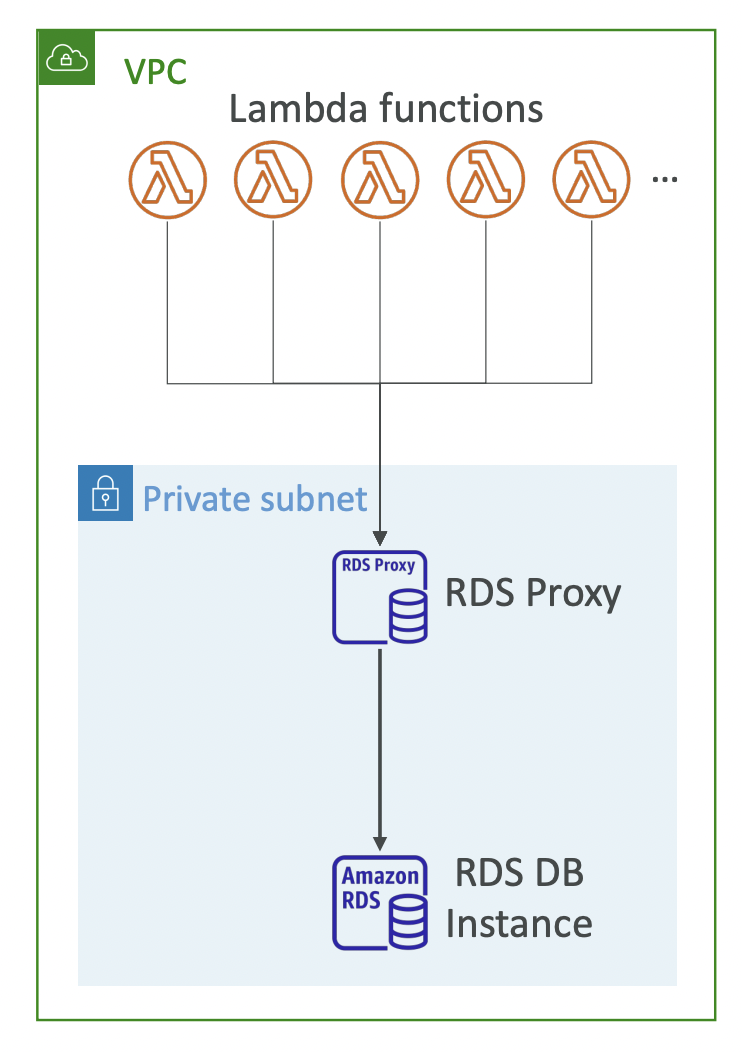

7.1.6. RDS Proxy

An RDS Proxy pools and shares incoming connections together resulting in fewer connections to the database. Think of it like a load balancer for the database.

This is useful when you have multiples instances scaling in and out that might connect to your database then disappear and leave lingering connections open. For example, when using Lambda functions.

It is serverless and supports autoscaling. It reduces failover time by up to 66%. It supports both RDS and Aurora, including most flavours of SQL.

No code changes are required for most apps, just point the connection details to the proxy rather than the database directly. Authentication is via IAM using credentials stored in AWS Secrets Manager. The RDS Proxy can only be accessed from inside the VPC, it is never publicly accessible.

7.2. Amazon Aurora

7.2.1. What is Aurora?

Aurora is a proprietary database from AWS with compatibility with Postgres and MySQL.

Aurora is “cloud-optimised” with faster read/write performance and less lag when creating read replicas. Storage grows automatically. Failover is instantaneous.

It stores 6 copies of your data across 3 AZ: 4 out of 6 copies are needed for writes, and 3 out of 6 for reads.

Storage is striped across hundreds of volumes. There is self-healing with peer-to-peer replication.

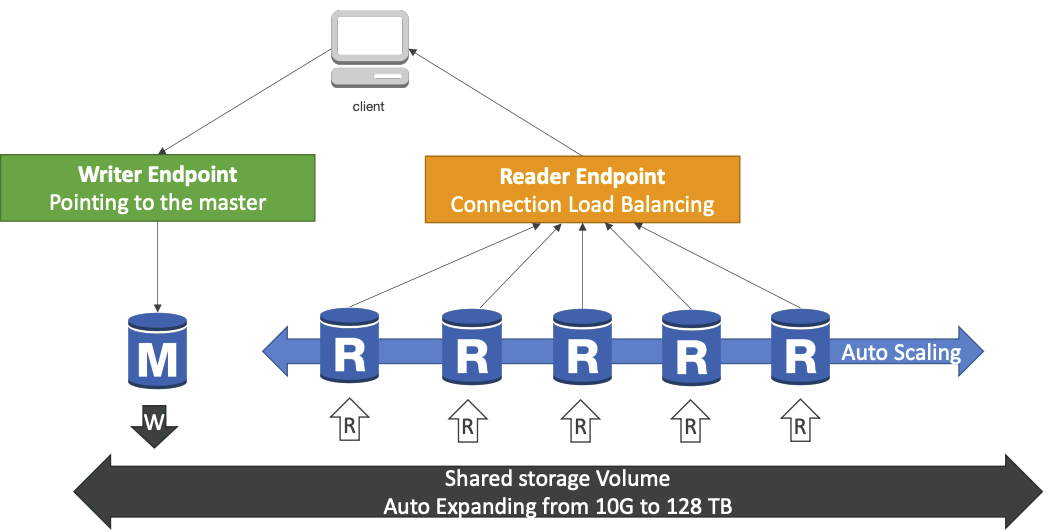

- One master Aurora instance takes writes. There is automated failover within 30 seconds if the master instance goes down.

- Master + up to 15 read replicas serve reads. You can set up auto-scaling for read replicas. There is support for cross-region replication.

The aurora DB cluster. You don’t interact with any instance directly; they can scale and be removed so the connection URL would be constantly changing. Instead there is a writer endpoint that always points to the master instance. There is a read endpoint which points to a load balancer that directs your query to a read replica.

7.2.2. Advanced Concepts

Auto scaling. Read replicas scale based on CPU usage or number of connections breaching a user-defined threshold.

Custom endpoint. Define a subset of the read replicas as a custom endpoint. This means we can route traffic for jobs that we know are database-intensive, like analytical queries, to a subset of the instances without affecting the performance on the other read replicas.

Aurora serverless. Automated database instantiation and auto-scaling based on actual usage.

Good for infrequent, intermittent or unpredictable workloads. No capacity planning needed, you pay per second of usage.

The client connects to a proxy fleet, which is like a load balancer that directs requests to Aurora instances that are scaled in the background.

Global Aurora. Cross-region replicas are useful for disaster recovery. Aurora Global Database is the recommended approach.

You create 1 primary regions for read/write. You can then have up to 5 secondary read-only regions. Replication lag is <1 second. Up to 16 read replicas per secondary region.

Promoting another region in the event of disaster recovery has a Recovery Time Objective (RTO) < 1 minute.

Aurora Machine Learning. Add ML-based predictions to your application via SQL. Supported on SageMaker or Amazon Comprehend.

Babelfish for Aurora PostgreSQL. Babelfish allows Aurora PostgreSQL to understand commands targeted for Microsoft SQL Server (written in T-SQL). It automatically translates between these flavours of SQL to make migration easier.

7.3. Backups and Monitoring

7.3.1. RDS

There are automated backups:

- Full backup daily during the backup window.

- Transaction logs backed up every 5 mins. This gives the ability to do a point-in-time restore.

- 1-35 days of retention. Can be disabled by setting to 0.

Manual DB snapshots are triggered by the user and can be retained as long as you want.

A use case for this is for an infrequently used database. A stopped RDS database will still incur storage costs. If you intend to stop it for a long time, you can snapshot it then restore it later.

7.3.2. Aurora

Automate backups are retained for 1-35 days. Cannot be disabled. Point-in-time recovery for any point in that timeframe.

Manual DB snapshots. Triggered by user and retained for as long as you want.

7.3.3. Restore Options

- Restore an RDS / Aurora backup or snapshot to create a new database.

- Restore a MySQL RDS database from S3. Create a backup of your on-premises database, store it in S3, the restore the backup file on to a new instance running MySQL.

- Restore a MySQL Aurora cluster from S3. Same as for RDS, except the on-premises backup must be created using Percona XtraBackup.

7.3.4 Aurora Database Cloning

Create a new Aurora DB cluster from an existing one. An example use case is cloning a production database into dev and staging.

It is faster than doing a snapshot+restore. It uses the copy-on-write protocol. Initially the clone uses the same data volume as the original cluster, then when updates are made to the cloned DB cluster additional storage is allocated and data is copied to be separated.

7.4. Encryption

Applies to both RDS and Aurora.

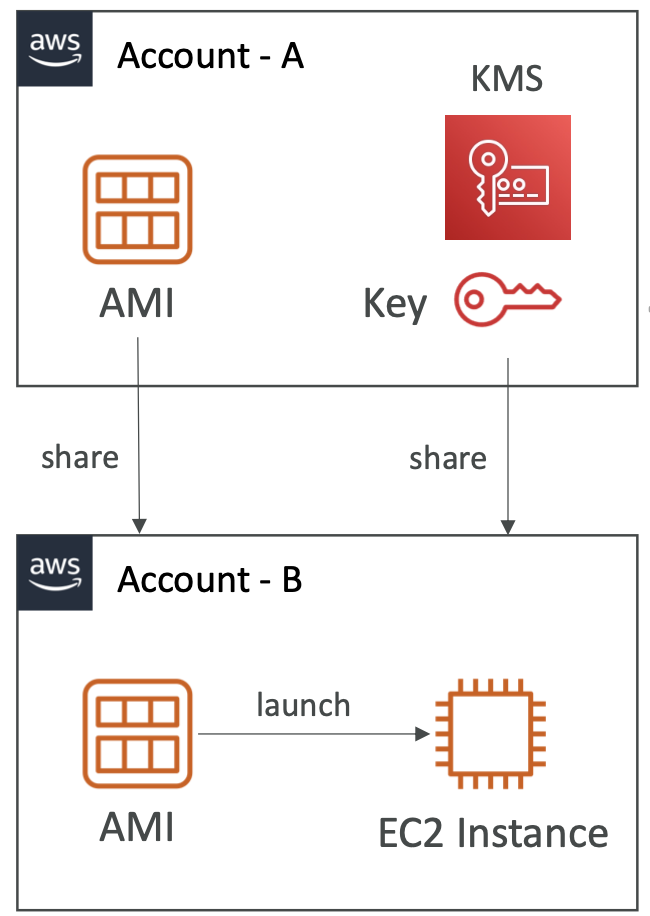

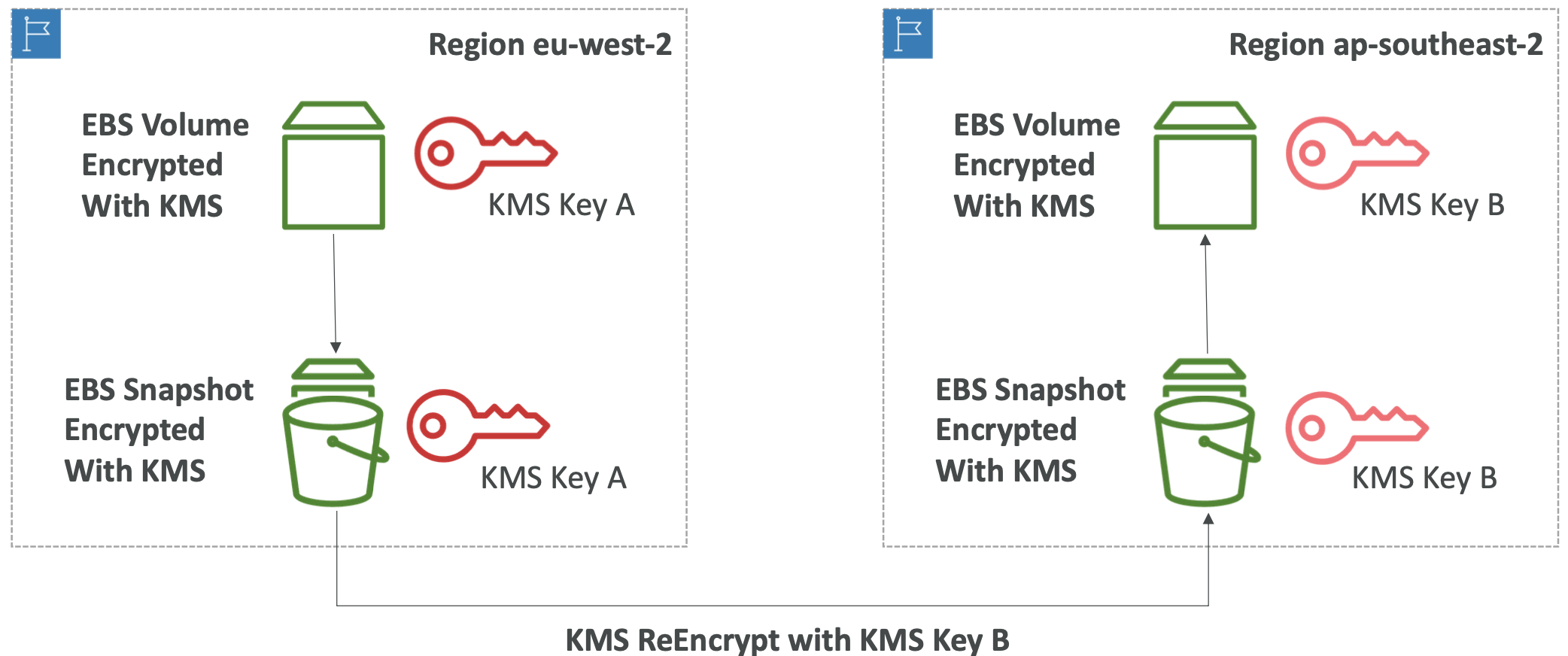

At rest encryption. Database master and read replicas are encrypted using AWS KMS. This must be defined at launch time. If master is not encrypted then the read replicas cannot be encrypted. If you want to encrypt and unencrypted database, you need to take a snapshot of it and restore a new database with encryption set up at launch time.

In flight encryption. RDS and Aurora are TLS-ready by default. Applications must use the provided AWS TLS root certificates on the client side.

Authentication can be via IAM or by the standard username/password used to connect to databases. Security groups can also be used to control access. Audit logs can be enabled and sent to CloudWatch Logs for longer retention.

7.5. ElastiCache

7.5.1. What is ElastiCache?

ElastiCache is a managed Redis or Memcached. Analogous to how RDS is a managed SQL database. It is managed, meaning AWS takes care of OS maintenance, configuration, monitoring, failure recovery, backups, etc.

Caches are in-memory databases with low latency. They reduce the load on your database for read-intensive workloads.

This can help make your application stateless. For example, when the user logs in, their session is written to the cache. If their workload is moved to another instance, their session can be retrieved from the cache.

It does, however, require significant changes to your application’s code. Instead of querying the database directly, we need to:

- Query the cache. If we get a cache hit, use that result.

- If we get a cache miss, read from the database directly.

- Then write that result to the cache ready for the next query.

We also need to define a cache invalidation strategy to ensure the data in the cache is not stale.

7.5.2. Redis vs Memcached

Redis replicates whereas Memcached shards.

Redis:

- Multi-AZ with auto-failover

- Read replicas to scale for high availability

- AOF persistence

- Backup and restore features

- Supports sets and sorted sets. Sorted sets allow for things like real-time leaderboards

Memcached:

- Multi-node for partitioning (sharding) data

- No replication (therefore not high availability)

- Not persistent

- Backup and restore available for the serverless option only

- Multi-threaded architecture

7.5.3. ElastiCache Security

ElastiCache supports IAM authentication for Redis. IAM policies on ElastiCache are only used for AWS API-level security.

For Memcached, it needs to be username/password. Memcached supports SASL-based authentication.

With Redis AUTH you can set a password/token when you create a cluster, which provides an extra level of security on top of security groups. It supports SSL in-flight encryption.

Common patterns for ElastiCache.

- Lazy loading - All the read data is cached, but data in the cache may become stale.

- Write through - Data is inserted/updated in the cache any time it is written to the DB. Ensures no stale data.

- Session store - Using the cache to store temporary session data, and using TTL to determine cache validation.

7.5.4. Common Port Numbers

Useful port numbers to know:

- 21 - FTP

- 22 - SFTP, SSH

- 80 - HTTP

- 443 - HTTPS

Common database ports:

- 5432 - PostgreSQL, Aurora

- 3306 - MySQL, MariaDB, Aurora

- 1433 - MySQL Server

- 1521 - Oracle RDS

8. Route 53

8.1. DNS

8.1.1. What is DNS?

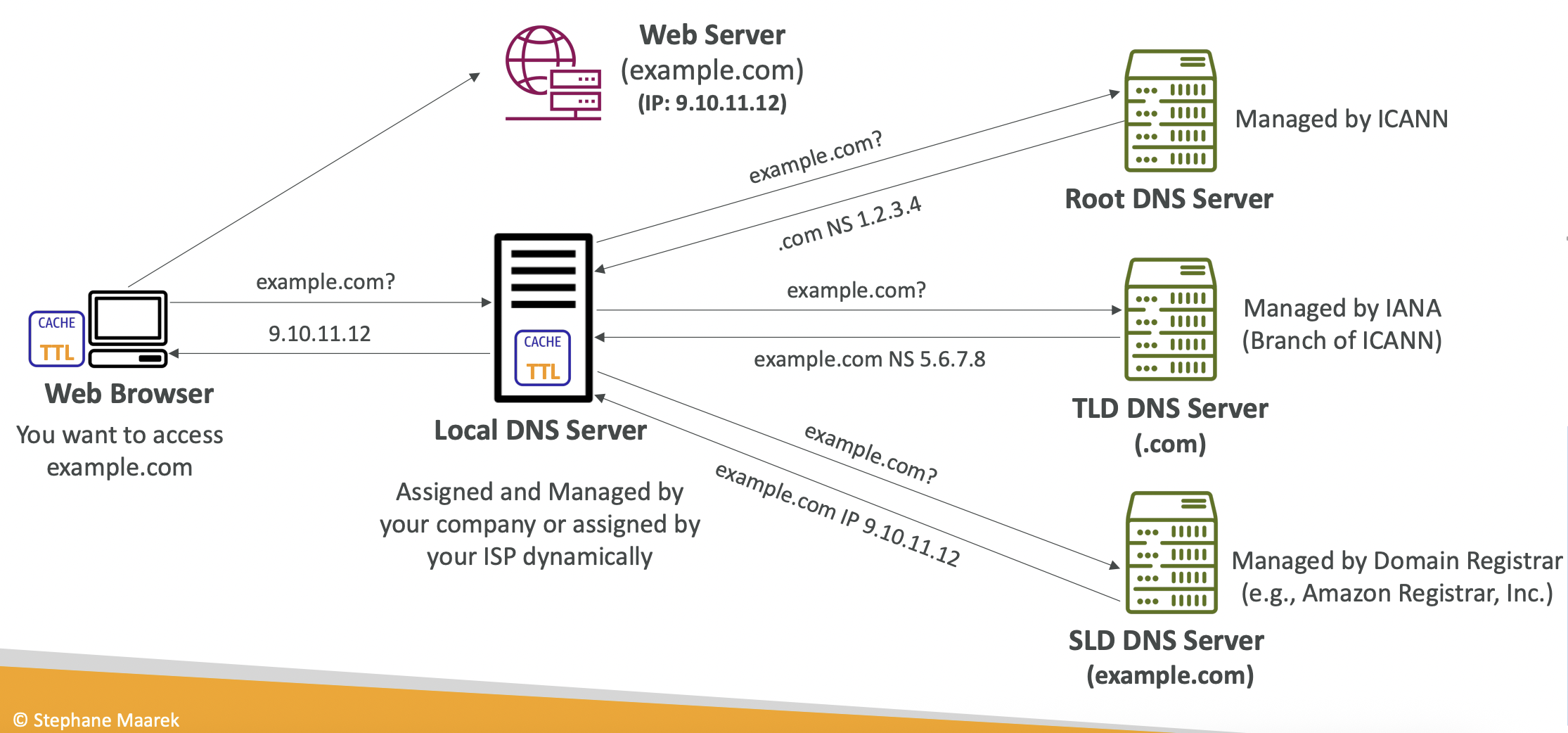

Domain Name System (DNS) translates human-friendly hostnames into the machine-friendly IP address. E.g. www.google.com -> 172.217.18.36

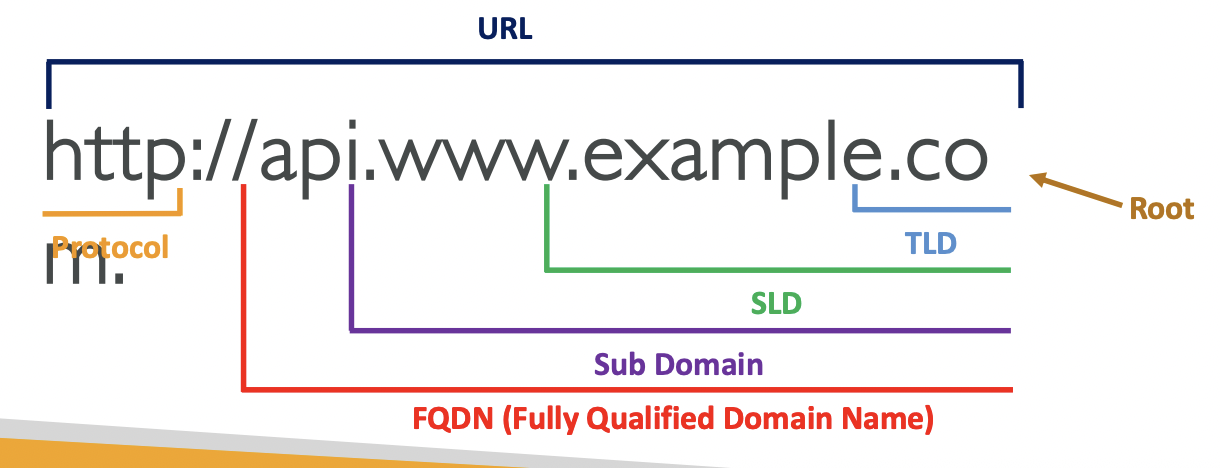

There is a hierarchical naming structure separated by ., e.g.

www.example.com

api.example.comTerminology:

- Domain registrar: Amazon Route 53, GoDaddy

- DNS Records: A, AAAA, CNAME

- Zone file: contains DNS records

- Name server: Server that resolves DNS queries

- Top-Level Domain (TLD): .com, .gov, .org

- Second-Level Domain (SLD): google.com, amazon.com

8.1.2. How DNS Works

Your web browser sends a request for www.example.com to a Local DNS Server managed by your company or ISP. This routes to a Root DNS Server managed by ICANN, which resolves the top-level domain (.com) and gives the corresponding IP address for that part. The browser then sends a request to the TLD DNS Server managed by ICANN which resolves the second-level domain. The browser then sends a request to the SLD DNS Server managed by Amazon Registrar etc, and that gives the IP address of the requested website.

8.2. Route 53

Route 53 is a fully managed authoritative DNS and Domain Regstrar. You can also check the health of your resources. Authoritative means the customer (you) can update the DNS records.

“53” is a reference to the traditional DNS port.

8.2.1. Records

You define records which define how you want to route traffic for a domain. Each record contains:

- Domain/subdomain name - example.com

- Record type - A/AAAA/CNAME/NS

- Value - 12.34.56.78

- Routing policy - how Route53 responds to queries

- TTL - how long this record is cached at DNS resolvers

Record types:

- A - maps a hostname to IPv4

- AAAA - maps a hostname to IPv6

- CNAME - maps a hostname to another hostname. The target is a domain which must have an A or AAAA record.

- NS - Name servers for the hosted zone. These are the DNS names/IP addresses for the servers that can respond to queries for your hosted zone.

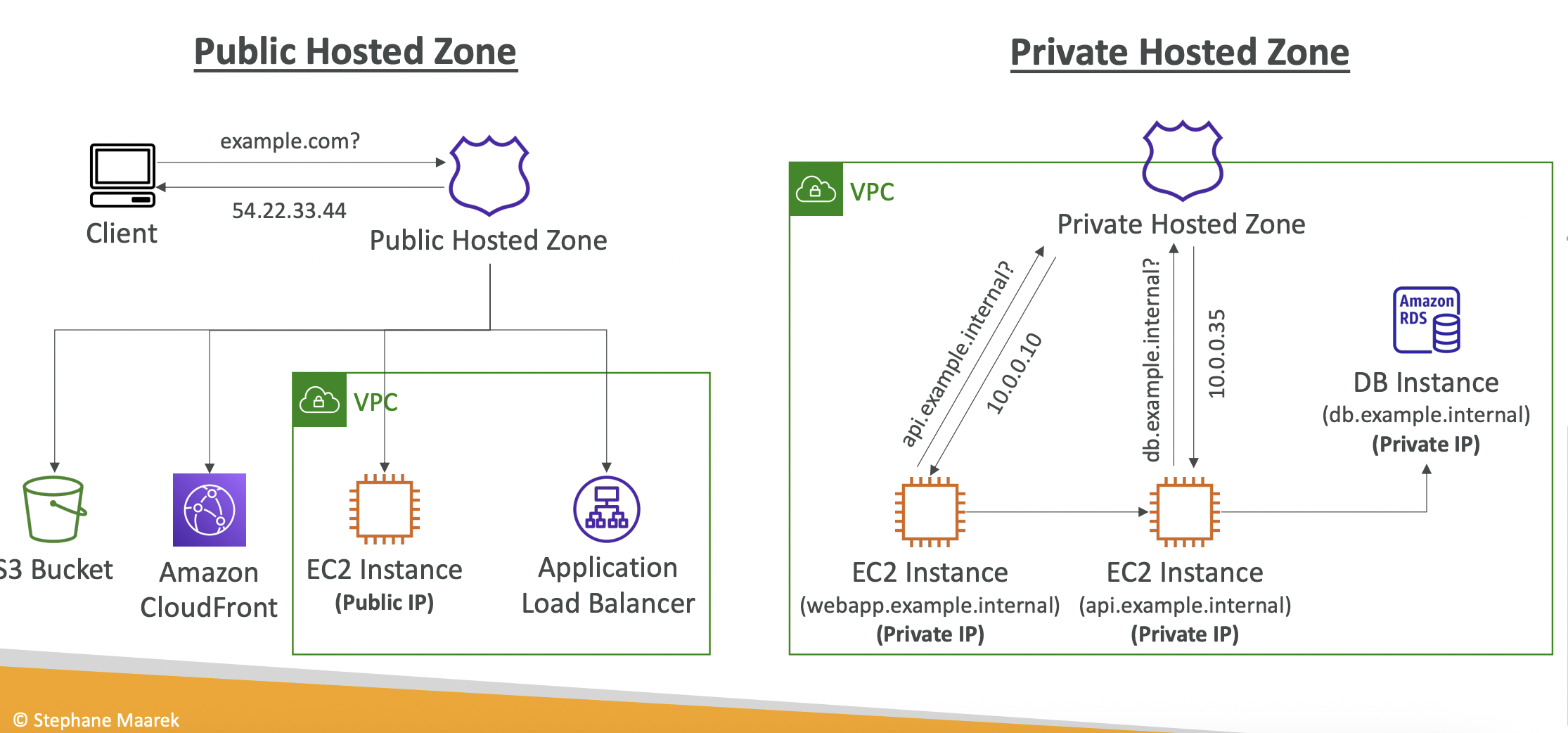

8.2.2. Hosted Zones

A hosted zone is a container for records that define how traffic is routed to a domain and its subdomains.

- Public hosted zones - contain records specifying how to route traffic on the internet (i.e. public domain names)

- Private hosted zones - contain records specifying how to route traffic in your VPC (i.e. private domain names)

It costs $0.50 per month per hosted zone.

Route 53 UI -> Domains -> Registered Domains

-> Choose a domain name -> Choose duration, auto renew

-> Checkout -> Contact infoNow in Hosted Zones you will see the DNS records created for your domain.

8.2.3. Creating a Record

Route 53 -> Hosted Zones -> Create RecordSpecify the record details discussed previously.

We can take the IP addresses from our EC2 instances, load balancers, etc and route to these as we wish.

8.2.4. TTL

Time To Live (TTL) on a record tells the client how long it should cache the record (i.e. the IP address) before requesting it again. TTL is mandatory for all records except Alias records.

A high TTL (e.g. 24 hours) results in less traffic to Route 53, but clients might possibly have outdated records. A low TTL results in more traffic to Route 53 (and therefore higher costs) but records are more up to date and so easier to change.

A common strategy when you know you are changing your DNS record soon is to temporarily lower the TTL close to the switchover.

8.2.5. CNAME vs Alias Records

Many AWS resources, like ELB and CloudFront, expose an AWS hostname, e.g. blabla.us-east-1.elb.amazonaws.com and we want to map it to myapp.domain.com.

CNAME records allow a hostname to point to any other hostname, but only for a non-root domain.

Alias records point a host name to an AWS resource, and this works for root domains and non-root domains. It is free and has a native health check. It automatically recognises changes in the resource’s IP address. It is always of type A or AAAA, i.e. IPv4 or IPv6. AWS sets the TTL so you can’t set this manually.

Valid Alias record targets: ELB, CloudFront Distributions, API Gateway, Elastic Beanstalk environments, S3 Websites (not buckets), VPC Interface Endpoints, Global Accelerator, Route 53 record in the same hosted zone.

You cannot set an Alias record for an EC2 DNS name.

8.3. Routing Policies

Routing policies define how Route 53 responds to DNS queries.

It isn’t “routing” in the sense of a load balancer; DNS does not route any traffic, it just responds to DNS queries.

Route 53 supports several routing policies:

Simple. Route traffic to a single resource. We can specify multiple values for the same record. The client will pick one of the IP addresses at random. Cannot use with health checks.

Weighted. Control the percentage of requests that go to each resource. We assign each record a relative weight; the DNS records must have the same name and type. Can be used with health checks.

Latency-based. Redirect to the resource with the lowest latency, based on traffic between users and AWS. Can be associated with health checks.

Failover. There is a health checker associated with the primary instance. If this passes, Route 53 returns its IP address to route traffic to it. If unhealthy, it fails over to another instance and returns that IP address.

Geolocation. Routing is based on the user’s location. This is subtly different from the latency-based policy. We can specify location by continent, country or US state. There should be a “default” record in case of no match. Can be used with health checks.

Geo-proximity. Route traffic to your resources based on the geographic location of users and resources. It is like a continuous equivalent of the discrete binning of the geolocation policy. We shift more traffic to resources based on the defined bias - a value between -99 to 99. Resources can be AWS resources (specify AWS region) or non-AWS resources (specify latitude and longitude).

IP-based routing. Routing is based of the clients’ IP addresses. You provide a list of CIDRs for your clients and the corresponding endpoints/locations. These are user-IP-to-endpoint mappings. An example is you route users from a particular ISP to a specify endpoint.

Multi-value. Used when routing traffic to multiple resources; Route 53 returns multiple values. Can be associated with health checks and will only return IP addresses of healthy instances.

It is not a replacement for an ELB. It doesn’t necessarily distribute load evenly, it just gives clients more options and lets them choose.

8.4 Health Checks

HTTP Health Checks are for public resources. We can use them to get automatic DNS failover.

We can use health checks to:

- Monitor resources

- Monitor other health checks. This is called a Calculated Health Check. We can use OR, AND or NOT logic to combine the results of multiple health checks, or specify at least N checks must pass.

- Monitor CloudWatch Alarms. This gives us a workaround to use them for private resources. The health checkers are outside the VPC so cannot access the endpoint directly. Create a CloudWatch Metric with and associated CloudWatch Alarm, and the health checker monitors the alarm.

Route 53 UI -> Health Checks -> Create health checkThere are 15 global health checkers in different regions. They will periodically send HTTP requests to /health and if >18% of them receive a 2xx or 3xx status code the endpoint is healthy. The health checker can use the text in the first 5120 bytes of the response.

You can customise the range of regions to use. You must configure your resource to allow incoming requests from the Route 53 health checker IP range.

8.5. Domain Registrar vs DNS Service

You buy/register your domain name with a Domain Registrar by paying an annual fee. The Domain Registrar usually provides you with a DNS service to manage your DNS records. Examples of domain registars are GoDaddy, Amazon Registrar.

But you don’t have to stick with the same service provider. You could buy a domain from GoDaddy and use Route53 to manage your DNS records. You can create a hosted zone in Route 53 and specify the custom Nameservers to do this.

9. Solutions Architect

9.1. Instantiating Applications Quickly

EC2 instances, RDS databases and other resources take time to boot up. Some common patterns to speed up boot time:

EC2 instances. Use a golden AMI which already has your applications and dependencies installed. Launch your instance from this AMI. You may have some user data or other dynamic data. Use a bootstrap script for these. A hybrid approach is to put as much static logic as possible into the golden AMI and keep the bootstrap script lean.

RDS databases. Restore from a snapshot to quickly recover/resume without having to do lots of slow inserts.

EBS volumes. Restore from a snapshot. The disk will already be formatted and have the correct data.

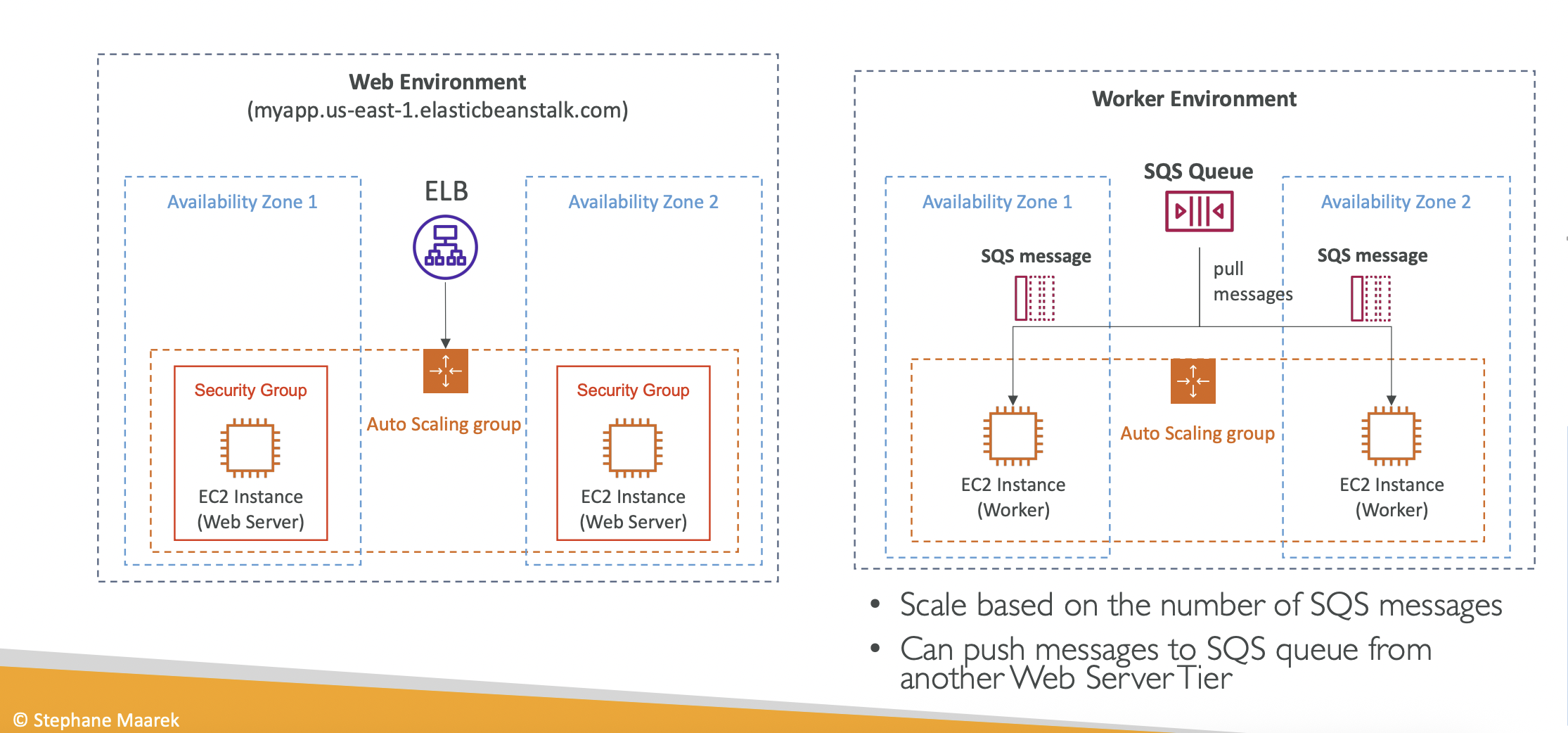

9.2. Elastic Beanstalk

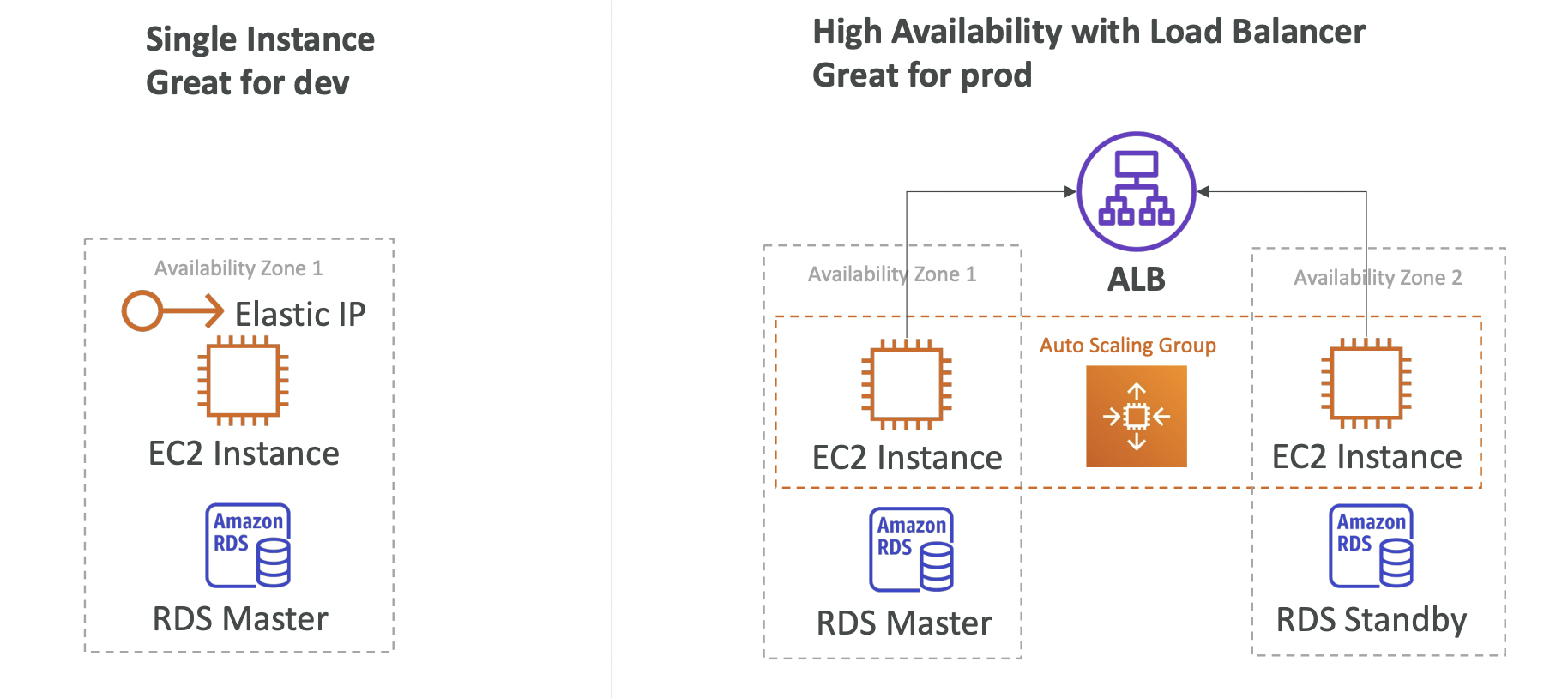

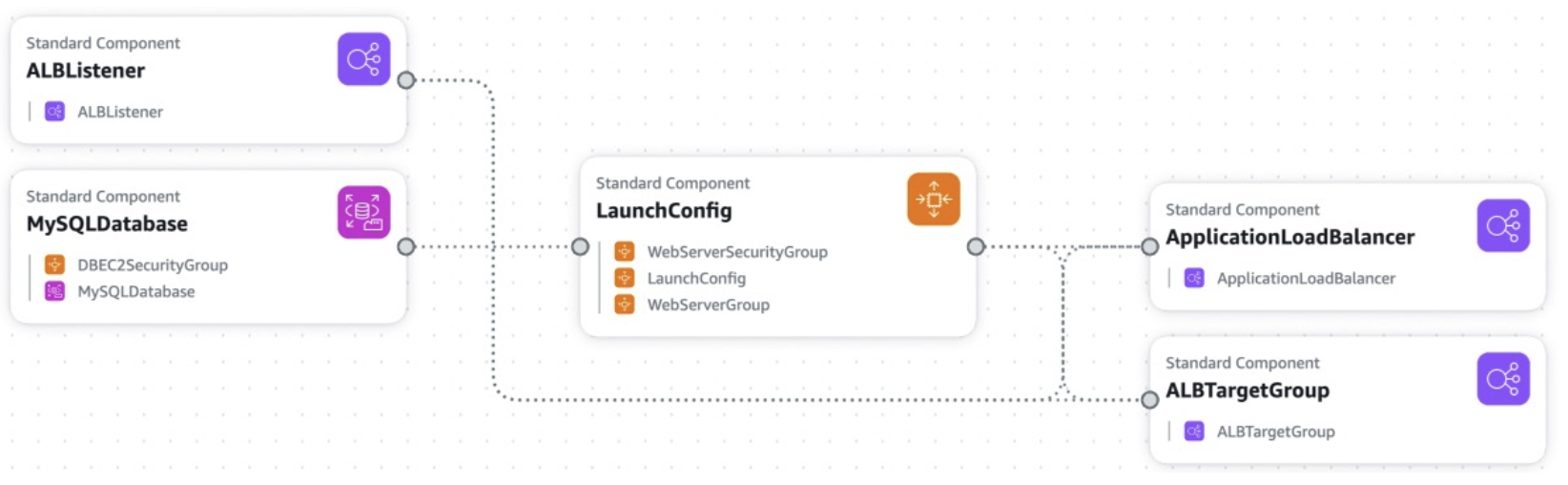

Many applications will have the same architecture: an ALB with an ASG scaling out the EC2 instances with an RDS database.

The pain points are around managing infrastructure and configuring all of the services each time. Ideally, we would have a single way of doing this rather than repeating the same steps every time.

Elastic Beanstalk is a managed service to handle all of this. It automatically handles scaling, load balancing, health monitoring, configuration. You still have control to configure the resources.

Beanstalk is free, but you pay for the underlying resources (EC2, RDS, ALB, etc).

Components:

- Application - a collection of Elastic Beanstalk components (environments, versions, configurations)

- Application Version - an iteration of your application code

- Environment - a collection of AWS resources running an application version. You can create multiple environments for dev, staging, prod, etc. There are web server and worker environment tiers

Create application -> Upload version -> Launch environment -> Manage environment (upload another version to update it)Beanstalk supports a lot of languages.

9.3. Web Server Environment vs Worker Environment

9.4. Deployment Modes

Single instance vs high availability

10. S3

## 10.1 S3 Objects and Buckets Simple Storage Service (S3) is used for backup and storage, disaster recovery, archive, hybrid cloud storage, application hosting, media hosting, data lakes and analytics, software delivery, static website.

S3 allows people to store objects (files) in buckets (directories). Bucket names must be unique globally (across all regions and all accounts) even though S3 is a region-specific service.

Buckets. Naming convention: 3-63 characters long, no uppercase or underscore, not an IP address, must start with lowercase letter or number and cannot start with xn–, must not end with -s3alias.

Objects. Objects (files) have a key which is the full path. The key is composed of prefix+object name. S3 does not have a concept of directories, objects are just files with really long keys containing slashes. The UI is misleading as it splits the buckets to look like directories for convenience/familiarity. The max object size is 5TB. Uploads of >5GB must use “multi-part upload”.

Metadata. Objects can contain metadata, a list of key/value pairs for system or user metadata. They can contain tags, up to 10 key/value pairs for security/lifecycle.

10.2. Security

Can be:

- User-based. IAM policies

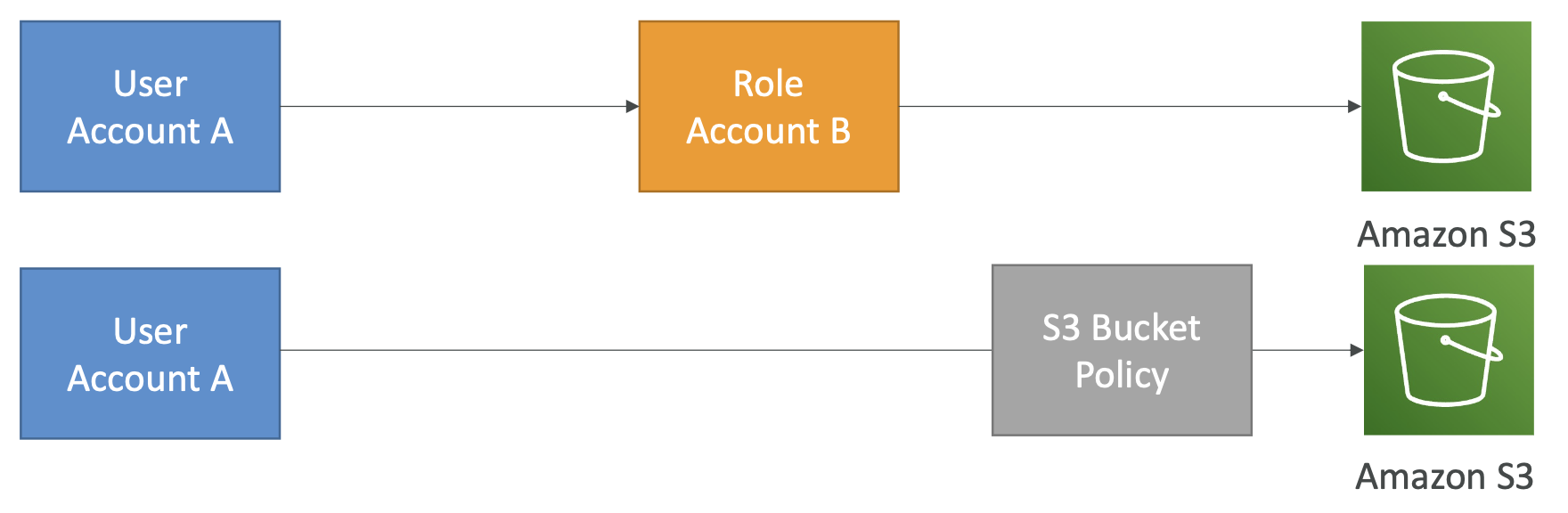

- Resource-based. Bucket policies (allows cross-account access), Object Access Control List (ACL) for finer grained control, Bucket ACL.

Use bucket policies to grant public access, grant cross-account access, or force encryption for objects at upload. We can use bucket settings or account settings to block public access, if we know that nothing should ever be public so we want to make sure nobody accidentally sets a policy that is too open.

The policies are OR based - a user can access a resource if either the IAM policy or the resource policy allows it, as long as there is not a specific deny.

Objects can be encrypted within S3 as an extra layer of security.

Policies are defined with a JSON. Important keys are:

- Resources: buckets and objects that this policy applies to

- Effect: allow or deny

- Actions: the API actions to allow or deny, like GetObject, ListObjects etc

- Principal: the account/user to apply the policy to

10.3. S3 Website

S3 can host static websites.

We must enable public read access on the bucket, otherwise users will get 403 errors.

10.4. S3 Versioning

You can version files in S3. If we upload a file with the same key, it will increment the version number.

This needs to be enabled at the bucket-level. It is best practice to use versioning for backup and roll back.

- Enabling versioning on an existing bucket will result in

version=nullfor existing objects. - Removing versioning on a bucket will not delete the previous versions on existing objects.

We can delete the newest version if we want to roll back to the previous version.

When we delete an object, we are actually updating it with a “delete marker” version. We can then rollback the delete marker to recover our file.

10.5. Replication

Cross-Region Replication (CRR) and Same-Region Replication (SRR) are used to asynchronously copy data from one bucket to another. They can be in different accounts.

Versioning must be enabled in source and destination buckets, and S3 must have the required IAM permissions.

Use cases:

- CRR. Compliance, lower latency access

- SRR. Log aggregation, sync dev vs prod environments

After you enable replication, only new objects are replicated. To also replicate the history, use S3 Batch Replication. Deletes with delete markers are replicated, but unversioned “permanent” deletes are not replicated to avoid replicating malicious deletes.

You cannot chain replication from Bucket A -> B -> C.

10.6. S3 Storage Classes

Durability represents how often you will lose an object in storage. S3 has 11 9s durability, so if you store 10 million objects you will lose a single object on average once every 10000 years.

This is the same for all storage classes.

Availability is how the uptime of a service. This varies depending on the storage class.

Storage classes:

- S3 Standard: general purpose, infrequent access, one zone infrequent access

- S3 Glacier: instance retrieval, flexible retrieval, deep archive

- S3 Intelligent Tiering: automatically move objects between tiers based on lifecycle policies.

10.7. Lifecycle Rules

You can transition objects between storage classes. This can be done manually, or automated through lifecycle rules.

- Transition actions. Configure objects to transition to another storage class. E.g. standard-IA 60 days after creation or glacier for archiving after 6 months.

- Expiration actions. Configure objects to expire (delete) after a specified period. Can be used to delete old versions of files, called “non-current versions”, if versioning is enabled. Can also be used to delete incomplete multi-part uploads after a certain time has elapsed.

Rules can be created for a certain prefix, or for certain tags.

Amazon S3 Analytics provides storage classes analysis. It gives daily reports with recommendations for transition actions for standard and standard-IA storage classes.

10.8. S3 Requester Pays

Generally, the owner of the bucket pays for storage and data transfer costs.

With Requester Pays buckets, the requester pays the data transfer costs associated with their request. The requester must be authenticated in AWS (they cannot be anonymous).

This is useful when sharing large amounts of data with other accounts.

10.9. S3 Event Notifications

An event can be an object being created, removed, restored, replicated.

You can filter on the object names, e.g. *.jpg

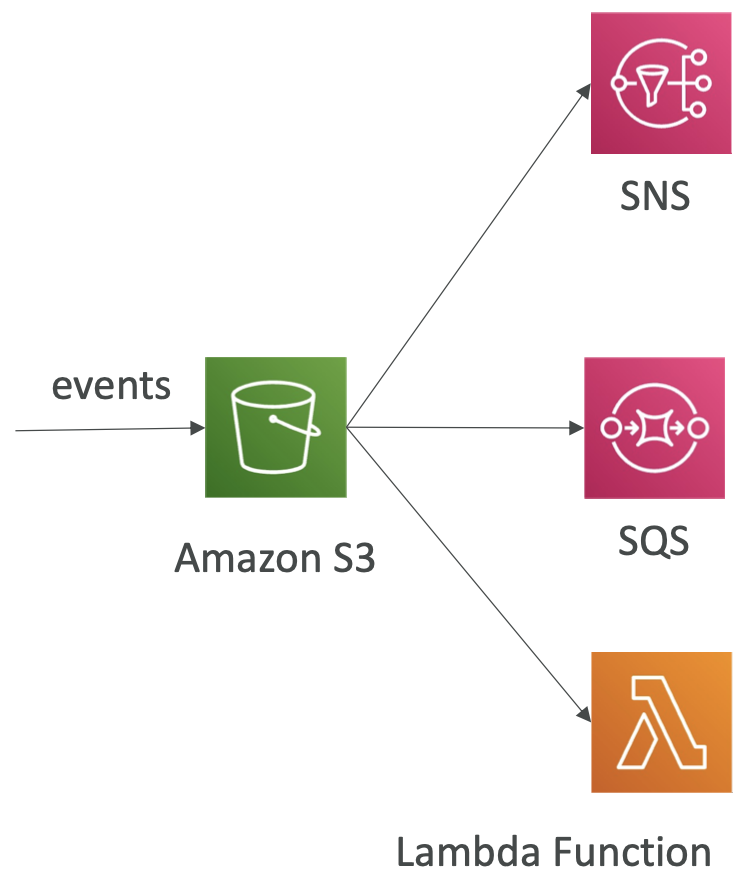

S3 Event Notifications can then be sent to other AWS resources, e.g. EC2, to trigger a downstream workflow. The notifications typically deliver in seconds but can take a minute or more.

The S3 service needs to have the appropriate access policy. To send event notifications to SNS, it needs an SNS Resource Access Policy. For SQS, it needs an SQS resource policy. And for Lambda functions, it needs a Lambda resource policy. Do this in the AWS console for the appropriate service (SNS, SQS, Lambda).

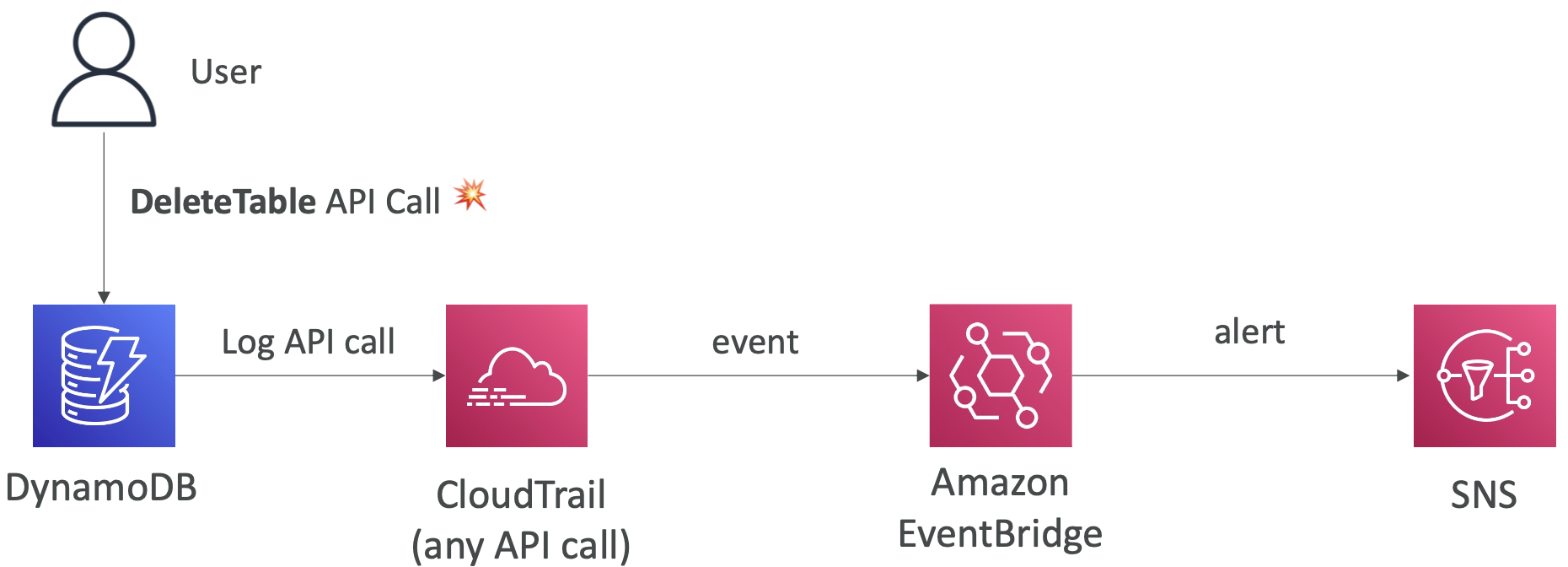

All events, regardless of whether they are going to SNS, SQS, Lambda etc, go via Amazon EventBridge. From here, you can set rules to go to over 18 AWS services as destinations.

EventBridge has filtering options, multiple destinations, and archive and replay capabilities.

10.10 S3 Performance

You can get at least 3500 PUT, COPY, POST, DELETE requests and 5500 GET/HEAD requests per second per prefix in a bucket.

The prefix is everything between the bucket and the file name. Remember S3 isn’t really a file system, it’s an object store, so the prefix is just a long string that happens to have some slashes in, it isn’t actually a hierarchy.

There are no limits to the number of prefixes you can have in a bucket.

Multi-part upload is recommended for files > 100 MB and required for files > 5GB The file is divided into parts and uploaded in parallel.

S3 transfer acceleration increases transfer speed by transferring the file to an AWS edge location and then forwarding it to the target region. So rather than uploading directly to an AZ that is far away, you can upload to your nearest location which is quicker and then transfer between regions using AWS’s private network which is fast. Minimise the time spent on public networks and maximise the time on private networks.

S3 Byte-range fetches parallelises GET requests by requesting specific byte ranges. This also gives better resilience in the case of failures. It can also be used to retrieve partial data, e.g. just the head of a file.

10.11 S3 Batch Operations

Perform bulk operations on existing S3 objects with a single request. A job consists of a list of objects, the action to perform, optional parameters. You can use S3 Inventory to get the object list and use Athena to filter the list.

S3 Batch Operations will manage retries, progress tracking, completion notifications and generate reports.

Common use cases: modify object metadata, copy objects between buckets, encrypt objects, modify tags, restore objects from S3 Glacier, invoke a Lambda function to perform custom actions on each object.

10.12 S3 Storage Lens

Analyse storage across the entire organisation. It can identify anomalies and cost efficiencies.

It aggregates data for an organisation, or specific accounts, regions, buckets or prefixes.

You can use this to create a dashboard or export metrics as a CSV file to an S3 bucket. There is a default dashboard and you can create your own custom dashboards.

Available metrics:

- Summary metrics. Storage bytes, object count

- Cost-optimisation metrics. Non-current version storage bytes, incomplete multi part upload storage bytes.

- Data protection metrics. Version-enabled bucket count, MFA enabled bucket count, KMS-enabled bucket count, cross-region replication rule count.

- Access management metrics. Object ownership bucket owner enforce bucket count.

- Event metrics. Insights for S3 Event notifications, number of buckets with S3 events enabled.

- Performance metrics. Transfer acceleration enabled bucket count.

- Activity metrics. Number of requests, split get get vs put vs list etc, bytes downloaded.

- Status code metrics. Count of 200 status codes, 403, 404 etc.

Some metrics are free and some are paid. Under the free tier, metrics are available for 14 days. For paid, it is available for 15 months and automatically published to CloudWatch.

11. S3 Security

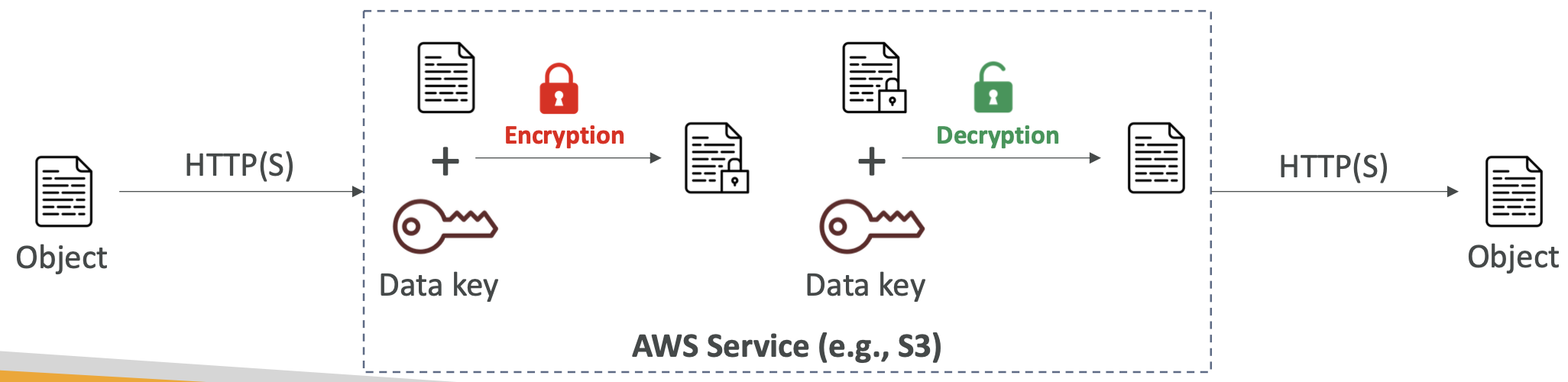

11.1. Object Encryption

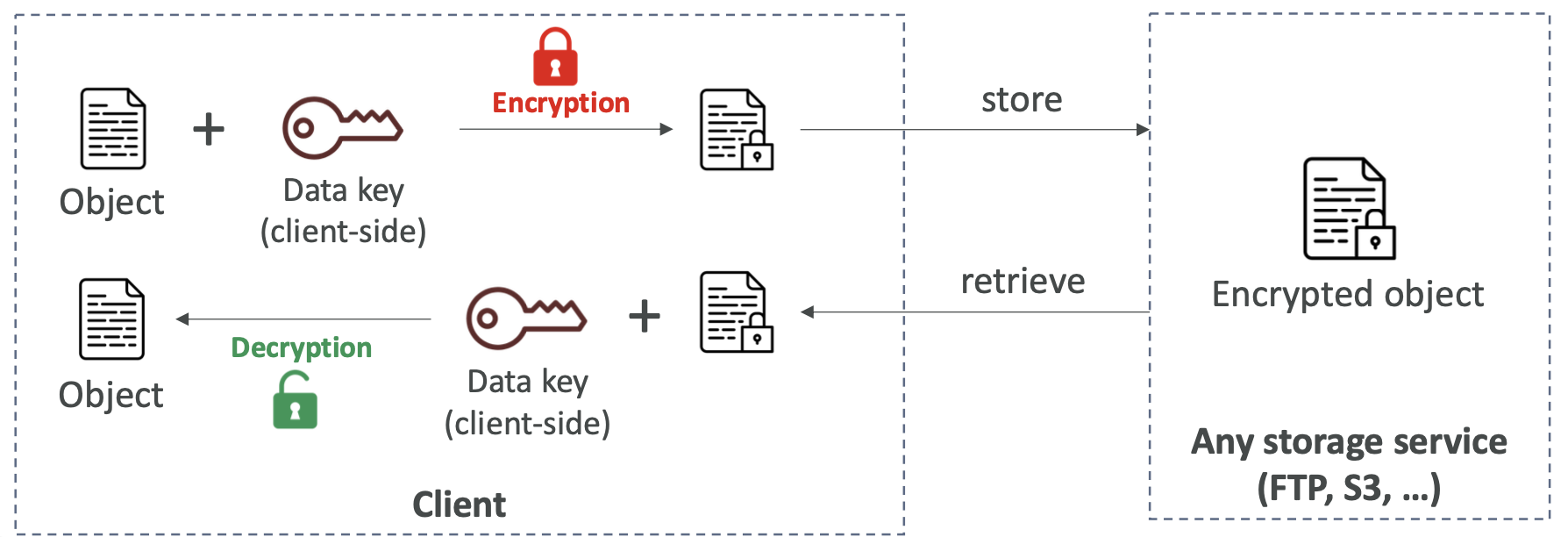

Object encryption can either be server-side or client-side.

- Server-Side Encryption (SSE). Can manage keys using S3-managed keys (SSE-S3), KMS (SSE-KMS) or customer-provided keys (SSE-C).

- Client-Side Encryption.

SSE-S3 is enabled by default for new buckets and objects. SSE-KMS allows usage statistics of keys to be tracked using CloudTrail. Each write and read using these keys will could towards your KMS APi usage. This can result in throttling if uploading lots of data. SSE-C requires the key to be provided in the header directly when uploading or downloading data. You can only use this from the CLI, not from the AWS console UI.

The option used is specified in the header when uploading the object.

For client-side encryption, the customer manages the keys and encryption themselves. Client must encrypt data before sending it to S3 and decrypt data when retrieving it. You handle this yourself and don’t need to specify this in the S3 encryption settings.

Encryption in transit is also called SSL/TLS. Amazon S3 exposes two endpoints: HTTPS which is encrypted in transit and HTTP which is not. HTTPS is recommended.

Encryption in transit and/or server-side encryption can be enforced using a bucket policy. Bucket policies are evaluated before “Default Encryption“ settings for the bucket.

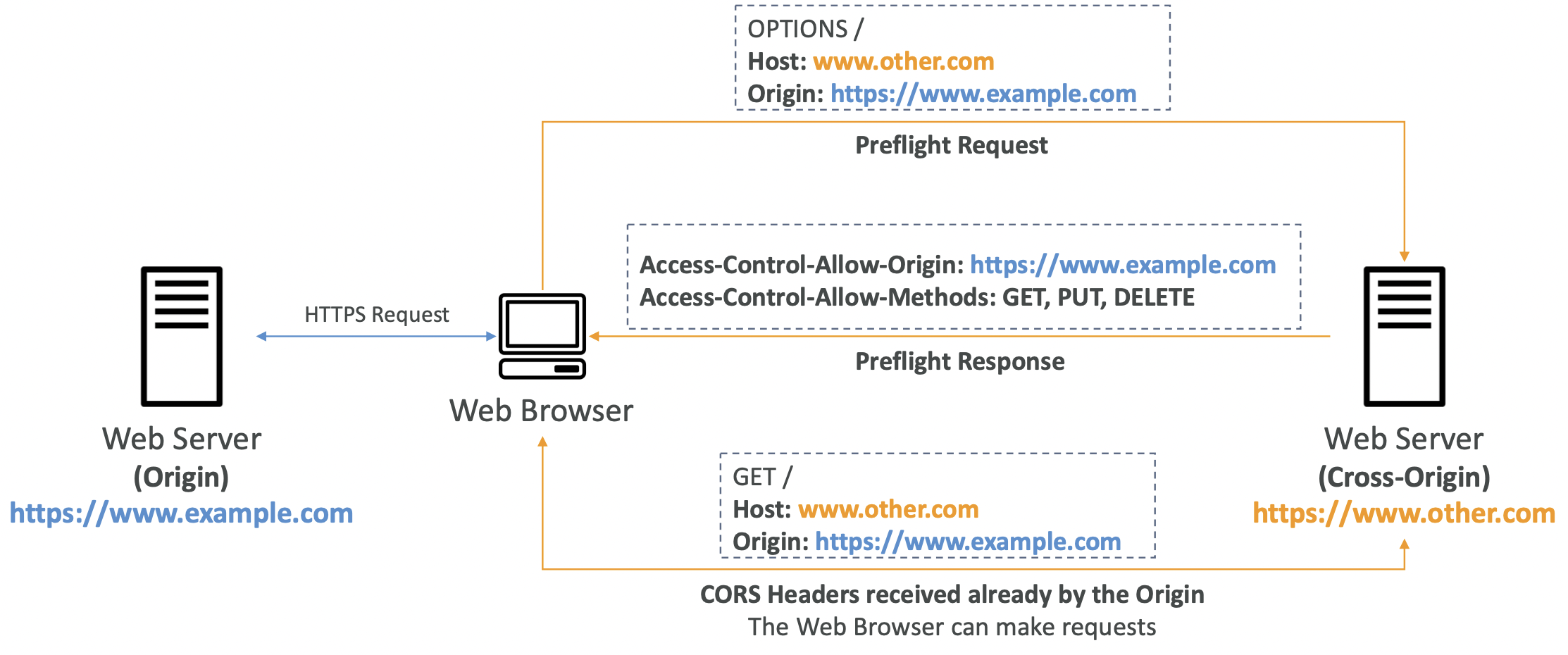

11.2. CORS

Cross-Origin Resource Sharing (CORS).

Origin = scheme (protocol) + host (domain) + portUsing https://www.example.com The port is implied by HTTPS (443) or HTTP (80). The scheme is www and the host is example.com

So these have the same origin:

- http://www.example.com/app1

- http://www.example.com/app2

These have different origins:

- http://www.example.com

- http://api.example.com

CORS is a web-browser based mechanism to allow requests to other origins while visiting the main origin. The other origin must allow for requests using CORS Headers.

We make a request to a web server, and it routes us to another server in a different region, for example to retrieve some images on the page.

On S3, if a client makes a cross-origin request on our S3 bucket, we need to enable the correct CORS headers. For example, if we are hosting a static website on S3 which contains images stored on a different S3 bucket; the image bucket must have the correct CORS headers enabled to fulfil the request. You can allow a specific region or * for any region.

11.3. MFA Delete

We can force users to authenticate with MFA when doing potentially destructive actions on the bucket, e.g. permanently deleting an object version or SuspendVersioning on the bucket. Only the bucket owner (root account) can enable/disable MFA Delete.

MFA won’t be required to enable versioning or list deleted versions.

11.4 S3 Access Logs

For audit purposes, you can log access requests to a particular S3 bucket, whether authorised or denied.

The logs are written to another S3 bucket; the target logging bucket must be in the same region. Never set the logging bucket to be the monitoring bucket! Otherwise you will create an infinite loop and rack up AWS costs.

11.5. Pre-signed URL

Users given a pre-signed URL inherit the permissions of the user that generated it for GET/PUT requests.

They can be generated in the AWS console, CLU or SDK. The expiration time can be 1 minute to 168 hours.

Within S3 console -> Find the object -> Object actions -> Share with pre-signed URL11.6. S3 Glacier Vault Lock

This allows us to adopt a Write Once Read Many (WORM) model, which is helpful for compliant and data retention.

We create a Vault Lock Policy, which means the object cannot be deleted by anyone, and the policy itself cannot be edited.

11.7. S3 Object Lock

S3 Object Lock is a similar idea but less restrictive. Again you can adopt a WORM model, but it is for specific objects for a specified amount of time. So you can block object deletion. The retention period must be set when creating the lock.

There are two retention modes:

- Compliance. Object versions cannot be overwritten or deleted by anyone user, even the root user. The retention modes cannot be edited once set. This is similar to vault lock and is the strictest setting.

- Governance. Most users cannot overwrite or delete objects or change the lock settings, but some users have special permissions to override this.

A third option is legal hold which protects an object indefinitely, independent of the retention period. Only users with the s3:PutObjectLegalHold IAM permission can add or remove legal holds on objects.

11.8. S3 Access Points

This is a more granular control over permissions at the prefix level rather than the bucket policy.

Say we have a bucket which has folders for multiple departments: finance, sales, analytics. We want to make sure each department can only access their folder. We could define a complicated bucket policy to enforce this.

A convenient alternative is to define a Finance Access Point for that folder, a Sales Access Point for that folder, etc.

Each access policy looks similar to a bucket policy — it is a JSON document with the same keys — but it applies to a prefix. Each access point has its own DNS name and access point policy.

We can restrict the access point to only be accessible from within the VPC. You must create a VPC Endpoint to connect to the Access Point, and define a VPC Endpoint Policy that allows access to the Access Point and target bucket.

11.9. S3 Object Lambda

This allows us to transform an object as it is loaded.

Say you want multiple versions of a file: the original, a redacted version for customers, an enriched version for the sales department.

We could store 3 different variations of each file. But this is an inefficient use of storage.

We can create a lambda function for each transformation and apply it at read time before it reaches the user. Only one S3 bucket is needed, on which we create an S3 Access Point and multiple S3 Object Lambda Access Points.

12. CloudFront

12.1 CloudFront Overview

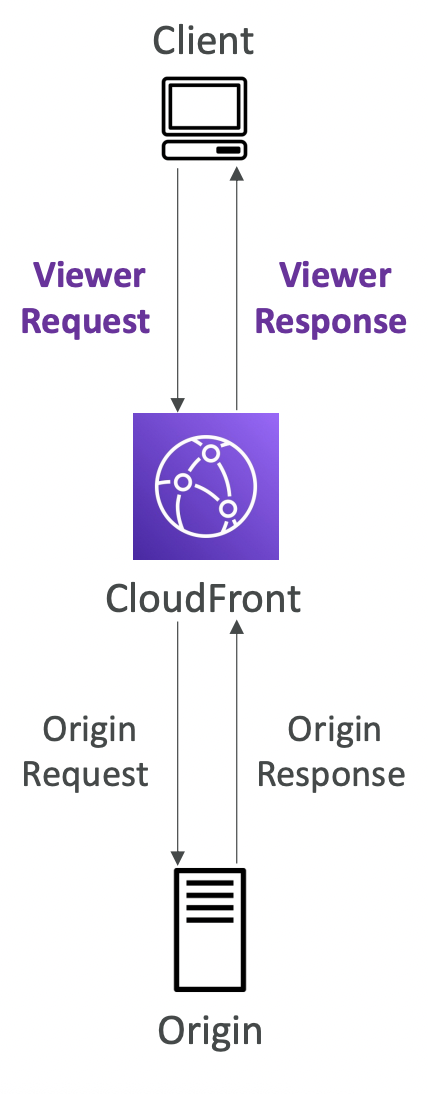

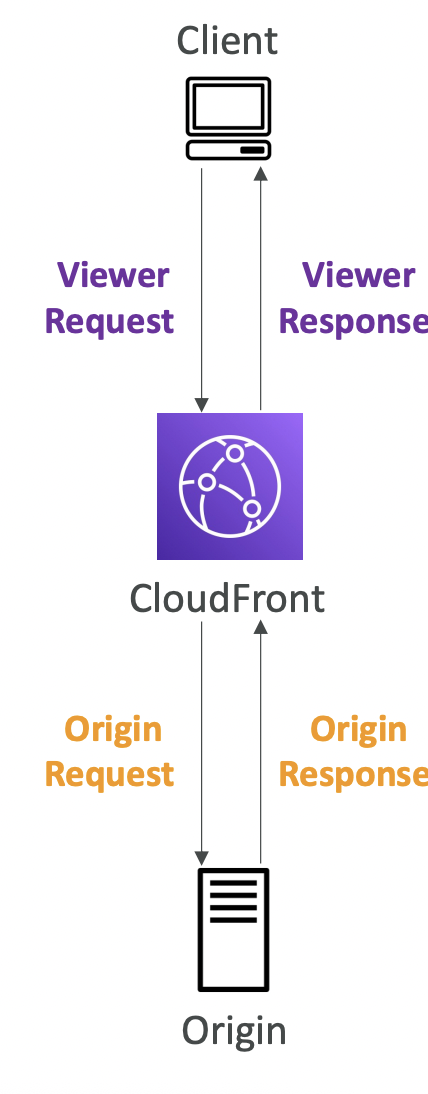

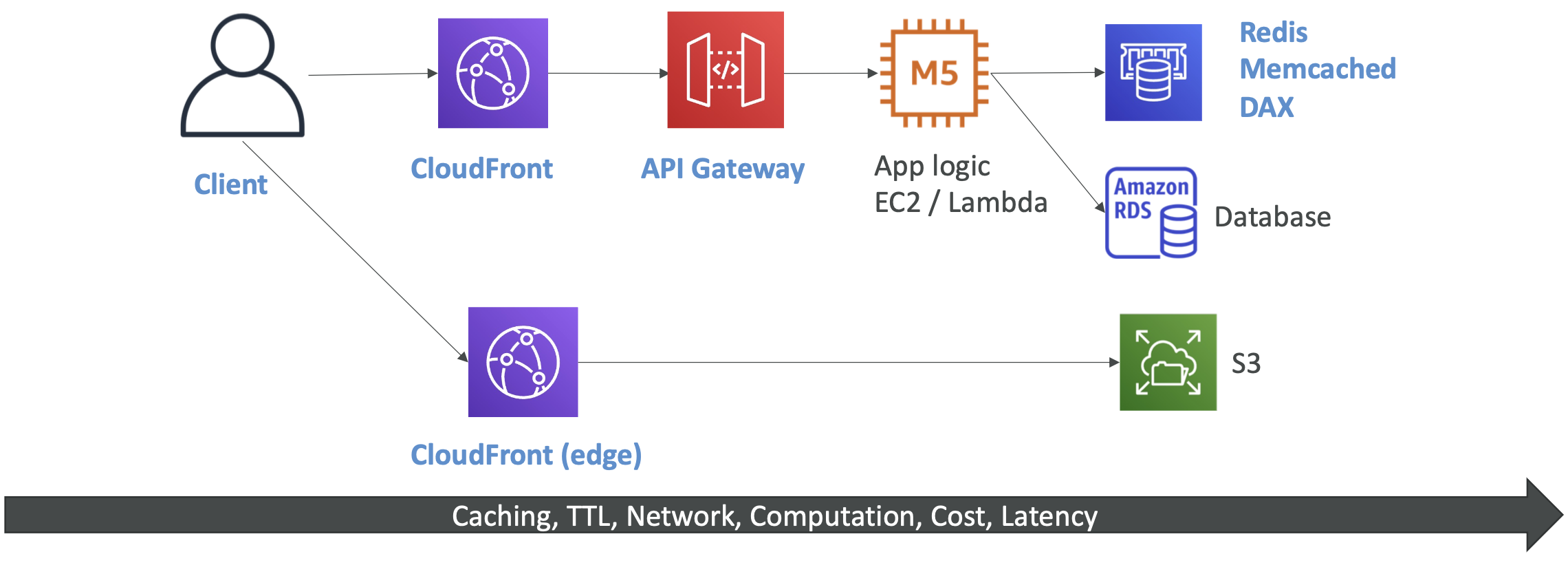

CloudFront is a Content Delivery Network (CDN). It improves read performance by caching content at the edges, at 216 points of presence globally. I this protects against DDoS attacks.

12.2. Origin

CloudFront can have different origins:

- S3 bucket. For distributing files and caching them at the edge. Secured using Origin Access Control (OAC).

- VPC Origin. For applications hosted in VPC private subnets - ALB, EC2 instances. CloudFront creates a VPC Origin inside the VPC and communicates with that. The old deprecated method was to create a public EC2 instance or ALB and attach a security group that only allowed access from the public IP addresses of the edge location; this is more error prone and less secure.

- Custom origin. Any public HTTP backend.

The client requests data from the edge location. If the data is cached, return it. Otherwise, fetch it from the origin and cache it at that edge location ready for any future requests.

CloudFront differs from S3 Cross-Region Replication, but naively seems similar in principle. CloudFront caches across the global edge network (there is no region selection) and only caches files for the TTL (short lived). Good for static content that must be highly available.

S3 Cross-Region Replication must be set up for each region you want replication to happen in, and files are updated in near real-time. Good for dynamic content that needs to be available in a small number of regions at low latency.

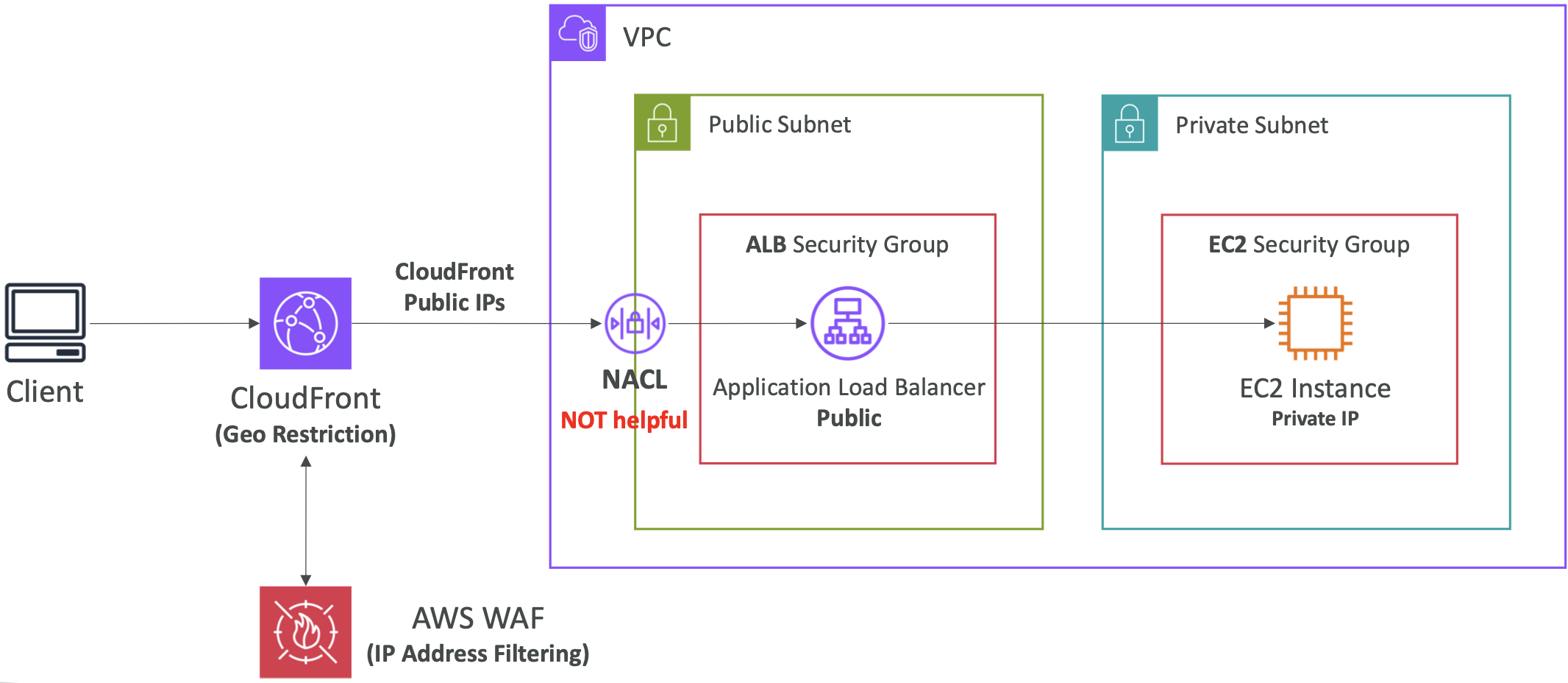

12.3. CloudFront Geo Restriction

You can restrict who can access your distribution based on location. You define an Allowlist or Blocklist to allow/block access to content is the user is in a particular country. The country is determined using a 3rd party Geo-IP database.

A use case is to enforce copyright laws based on country.

12.4. Price Classes

The cost of data out per edge location varies.

You can reduce the number of edge locations for cost reduction. There are three price classes:

- Price Class All. All regions, best performance but most expensive.

- Price Class 200. Most regions but excluding the most expensive.

- Price Class 100. Only the cheapest regions.

12.5. Cache Invalidation

If you update the content on the backend origin, the CloudFront edge location will only get the refreshed content after the TTL expires.

You can force an entire or partial cache refresh by performing a CloudFront Invalidation. You pass in a file path. This can be all files * or a specific folder /images/*.

It essentially tells the edge location that the content isn’t there, so the next user that requests the data will get a cache miss and go to the origin. Note that it doesn’t refresh the data per se, it invalidates the data and it’s only when the next user makes a request that the edge location retrieves the updated content.

12.6 AWS Global Accelerator

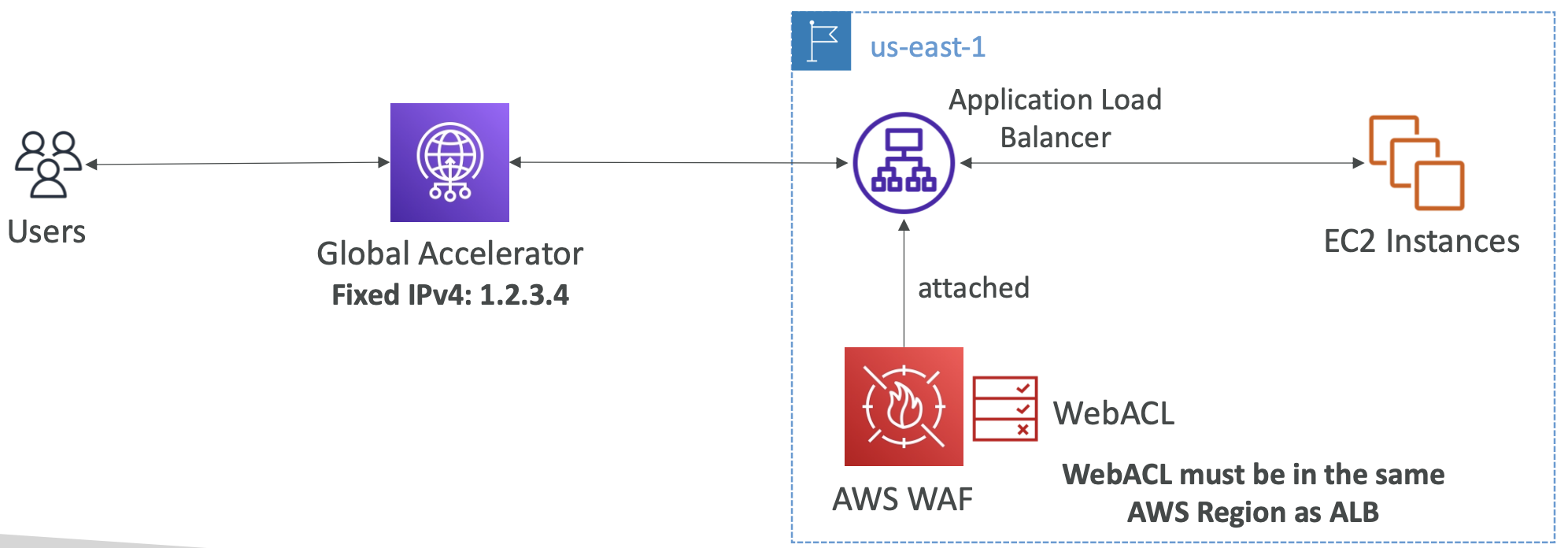

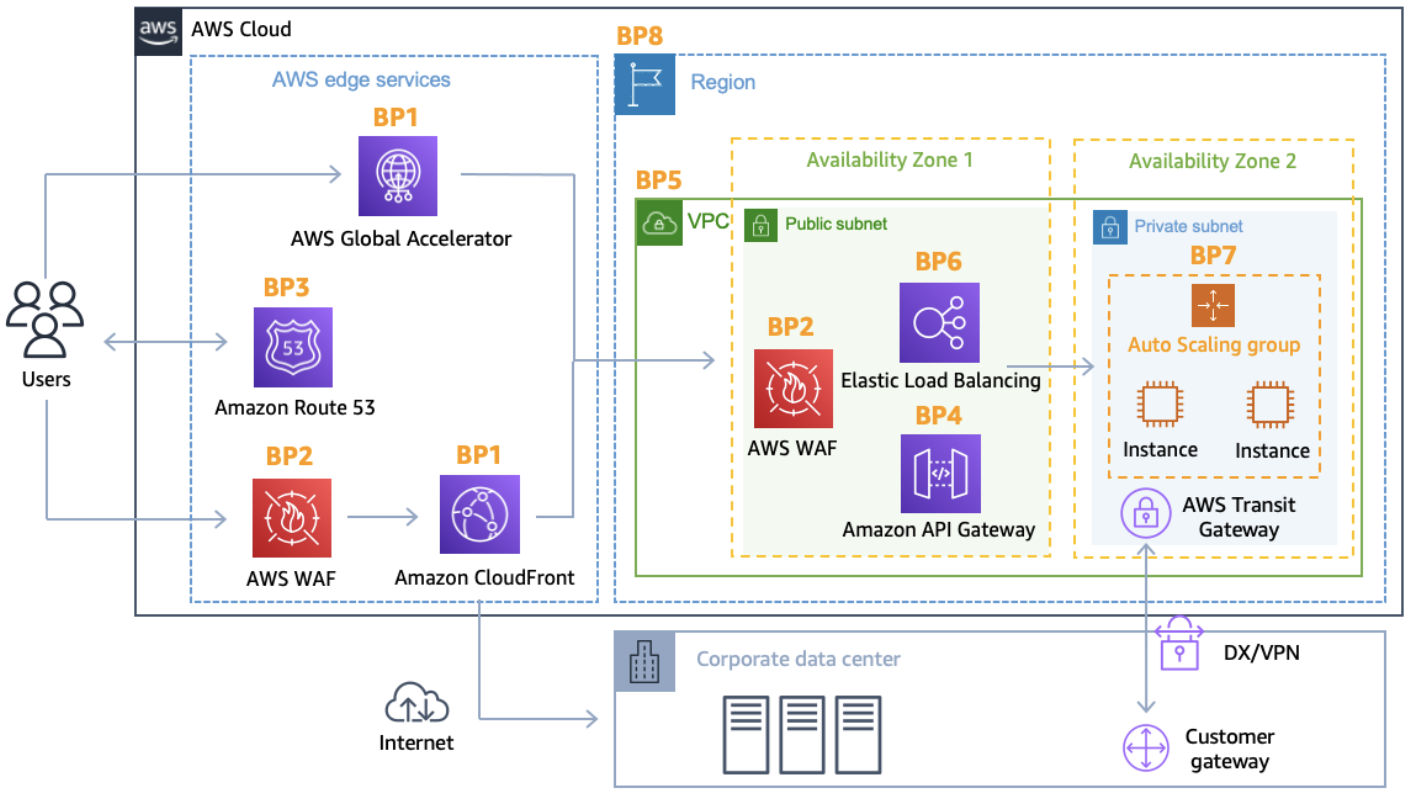

The problem AWS Global Accelerator is solving is you have an application deployed in one region, say an ALB, but your traffic is global. So some users in faraway locations have many hops before reaching our content. We want to go through the AWS network as soon as possible to minimise latency.

12.6.1. Unicast IP vs Anycast IP

Unicast is what we’re typically familiar with. Each server holds one IP address.